[Blog of the Day] PostgreSQL Data Synchronization with Slony-I in Practice

Official documentation:

Slony-I

System requirements: http://slony.info/documentation/requirements.html

Configuration and installation: http://slony.info/documentation/administration.html#INSTALLATION

Usage and configuration: http://slony.info/documentation/tutorial.html#FIRSTDB

Environment:

Master: CentOS Linux 32-bit virtual machine, IP 192.168.100.240

PostgreSQL 9.2.13

Slony-I 2.2

Slave: CentOS Linux 32-bit virtual machine, IP 192.168.100.241

PostgreSQL 9.2.13

Slony-I 2.2

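1. Build and install PostgreSQL from source

On both the master and the slave, build, install, and configure PostgreSQL as follows:

Build and install PostgreSQL 9.2 from source.

Installation directory: /opt/pgsql2

After installation, edit pg_hba.conf and postgresql.conf so that the master and the slave accept remote connections.

Note: the PostgreSQL version must be one that Slony-I supports; see http://slony.info/documentation/requirements.html .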
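The remote-access step can be sketched as a small shell helper. This is only an illustration under assumptions: the function name `enable_remote_access`, the data directory argument, and the 192.168.100.0/24 subnet are not from the original post, and `md5` is just one possible authentication method.

```shell
#!/bin/sh
# Sketch: append remote-access settings to the config files in a data directory.
# enable_remote_access PGDATA SUBNET   (hypothetical helper, not part of PostgreSQL)
enable_remote_access() {
    pgdata="$1"
    subnet="$2"
    # Listen on all interfaces instead of only on localhost.
    printf "listen_addresses = '*'\n" >> "$pgdata/postgresql.conf"
    # Allow password-authenticated connections from the given subnet.
    printf 'host    all    all    %s    md5\n' "$subnet" >> "$pgdata/pg_hba.conf"
}
```

For example, `enable_remote_access /path/to/data 192.168.100.0/24` on each server. A change to listen_addresses only takes effect after PostgreSQL is restarted.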

2. Prepare the master and slave databases for Slony-I replication

2.1 Declare environment variables

On both the master and the slave, run:

    su - postgres
    export CLUSTERNAME=lyy_cluster1
    export MASTERDBNAME=masterdb
    export SLAVEDBNAME=slavedb
    export MASTERHOST=192.168.100.240
    export SLAVEHOST=192.168.100.241
    export REPLICATIONUSER=postgres

Notes:

1. REPLICATIONUSER is normally a PostgreSQL superuser.

2. When setting MASTERHOST and SLAVEHOST, avoid localhost where possible, because it can lead to the error: ERROR remoteListenThread_1: db_getLocalNodeId() returned 2 - wrong database?
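Since a loopback host name is an easy mistake to make here, a small pre-flight check can catch it before any Slony-I command runs. The sketch below is an assumption-laden illustration: the function names `is_non_loopback` and `check_replication_hosts` are hypothetical, and the pattern list covers only the most common loopback spellings.

```shell
#!/bin/sh
# is_non_loopback HOST: succeed unless HOST is empty or a loopback name/address.
is_non_loopback() {
    case "$1" in
        ""|localhost|localhost.*|127.*|::1) return 1 ;;
        *) return 0 ;;
    esac
}

# check_replication_hosts: validate MASTERHOST and SLAVEHOST from the environment.
check_replication_hosts() {
    for name in MASTERHOST SLAVEHOST; do
        # Look up the variable whose name is stored in $name.
        eval "value=\${$name:-}"
        if ! is_non_loopback "$value"; then
            echo "$name='$value' is empty or a loopback address" >&2
            return 1
        fi
    done
}
```

Running `check_replication_hosts || exit 1` at the top of a setup script stops early when either variable is unset or points at the local loopback interface.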

2.2 Prepare the databases from the environment variables

On both the master and the slave, create the corresponding objects. The commands below use $PGBENCHUSER as the owner of the new databases; it is not among the variables declared in 2.1, so export it first.

    # On the master
    cd /opt/pgsql2/bin
    ./createuser -p 5432 -U postgres -SRD -P $PGBENCHUSER    # skip if the user already exists
    # prompts: password for the new user (twice), then the password of superuser postgres
    ./createdb -p 5432 -U postgres -O $PGBENCHUSER -h $MASTERHOST $MASTERDBNAME
    # prompt: password of superuser postgres

    # On the slave
    cd /opt/pgsql2/bin
    ./createuser -p 5432 -U postgres -SRD -P $PGBENCHUSER    # skip if the user already exists
    # prompts: password for the new user (twice), then the password of superuser postgres
    ./createdb -p 5432 -U postgres -O $PGBENCHUSER -h $SLAVEHOST $SLAVEDBNAME
    # prompt: password of superuser postgres

On the master, create the table to replicate (a table must have a primary key for Slony-I to replicate it):

    [postgres@localhost bin]$ ./psql -U $PGBENCHUSER -h $MASTERHOST -d $MASTERDBNAME
    psql (9.2.13)
    Type "help" for help.

    masterdb=# CREATE TABLE lyy(id int primary key, name varchar);
    CREATE TABLE
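Because the primary-key requirement is easy to overlook in a database with many tables, it can help to list the tables that lack one before adding them to a set. The following is a minimal sketch: the helper name `tables_without_pk_sql` is hypothetical, and the query relies only on the standard pg_class, pg_namespace, and pg_constraint catalogs.

```shell
#!/bin/sh
# Print a catalog query that lists ordinary user tables without a primary key.
tables_without_pk_sql() {
    cat <<'EOF'
SELECT n.nspname AS schema_name, c.relname AS table_name
FROM pg_class c
JOIN pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind = 'r'
  AND n.nspname NOT IN ('pg_catalog', 'information_schema')
  AND NOT EXISTS (
        SELECT 1 FROM pg_constraint k
        WHERE k.conrelid = c.oid AND k.contype = 'p')
ORDER BY 1, 2;
EOF
}
```

A possible use is `tables_without_pk_sql | ./psql -U postgres -h $MASTERHOST -d $MASTERDBNAME`; an empty result means every user table has a primary key.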

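2.3 Create the pl/pgSQL procedural language

Slony-I requires the pl/pgSQL procedural language in each database. If the template database template1 already has pl/pgSQL installed, a newly created $MASTERDBNAME inherits it and the commands below are unnecessary (PostgreSQL 9.0 and later install pl/pgSQL by default).

Otherwise, run on the master and on the slave respectively:

    # in the bin directory, on the master
    ./createlang -h $MASTERHOST plpgsql $MASTERDBNAME
    # in the bin directory, on the slave
    ./createlang -h $SLAVEHOST plpgsql $SLAVEDBNAME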

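2.4 Manually copy the table definitions from the master to the slave

Slony-I does not copy table definitions to the slave when the slave subscribes to the master, so the definitions have to be loaded into the slave beforehand. pg_dump with -s (schema only) does this:

    # Copy the tables created in masterdb into slavedb:
    # dump the schema from the master and pipe the result into psql against the slave.
    # Run in the bin directory.
    [postgres@localhost bin]$ ./pg_dump -s -U postgres -h 192.168.100.240 masterdb | ./psql -U postgres -h 192.168.100.241 slavedb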

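3. Build and install Slony-I from source

On both the master and the slave, install Slony-I with the following steps.

Slony-I download: http://slony.info/downloads/2.2/source/

Download and unpack slony-2.2, then configure, build, and install it, confirming that each step succeeds before moving on to the next.

    cd ../slony-2.2
    ./configure --with-pgconfigdir=/opt/pgsql944/bin --with-perltools
    gmake all
    gmake install

Notes: --with-pgconfigdir must name the bin directory (the one containing pg_config) of the PostgreSQL installation that Slony-I will be used with. If the altperl scripts will be used later to configure Slony-I, --with-perltools must be passed to configure.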

4. Configure Slony-I and start replication

4.0 Background on configuring and enabling Slony-I replication (for understanding only):

Configuring the Database For Replication.

Creating the configuration tables, stored procedures, triggers and configuration is all done through the slonik tool. It is a specialized scripting aid that mostly calls stored procedures in the master/slave (node) databases.

The example that follows uses slonik directly (or embedded directly into scripts). This is not necessarily the most pleasant way to get started; there exist tools for building slonik scripts under the tools directory, including:

- Section 6.1.1 - a set of Perl scripts that build slonik scripts based on a single slon_tools.conf file.

- Section 6.1.2 - a shell script (e.g. - works with Bash) which, based either on self-contained configuration or on shell environment variables, generates a set of slonik scripts to configure a whole cluster.

The two methods below are only summarized here; for details see http://slony.info/documentation/tutorial.html#FIRSTDB .

Method 1: Using slonik Command Directly

Method 2: Using the altperl Scripts

Further reading: the altperl section of the admin guide shipped with the source (doc/adminguide/additionalutils.html#ALTPERL in slony1-2.2.4).

4.1 Using the slonik command directly (method 1 above)

Configure the Slony-I cluster on the master

On the master, create and run slony_setup.sh to configure the cluster:

    [postgres@localhost data]$ vi slony_setup.sh

    CLUSTERNAME=lyy_cluster1
    MASTERDBNAME=masterdb
    SLAVEDBNAME=slavedb
    MASTERHOST=192.168.100.240
    SLAVEHOST=192.168.100.241
    REPLICATIONUSER=postgres

    /opt/pgsql92/bin/slonik <<_EOF_
    #--
    # define the namespace the replication system uses;
    # in our example it is lyy_cluster1
    #--
    cluster name = $CLUSTERNAME;

    #--
    # admin conninfo's are used by slonik to connect to
    # the nodes, one for each node on each side of the cluster;
    # the syntax is that of PQconnectdb in the C-API
    #--
    node 1 admin conninfo = 'dbname=$MASTERDBNAME host=$MASTERHOST user=$REPLICATIONUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDBNAME host=$SLAVEHOST user=$REPLICATIONUSER';

    #--
    # init the first node. Its id MUST be 1. This creates
    # the schema _$CLUSTERNAME containing all replication
    # system specific database objects.
    #--
    init cluster ( id=1, comment = 'Master Node');

    #--
    # Slony-I organizes tables into sets. The smallest unit
    # a node can subscribe is a set. The master or origin of
    # the set is node 1.
    #--
    create set (id=1, origin=1, comment='All masterdb tables');
    set add table (set id=1, origin=1, id=1,
                   fully qualified name = 'public.lyy',
                   comment='lyy table');
    # set add sequence (set id=1, origin = 1, id = 1,
    #                   fully qualified name = 'public.t1_id_seq',
    #                   comment = 't1 id sequence');

    #--
    # Create the second node (the slave), tell the 2 nodes how
    # to connect to each other and how they should listen for events.
    #--
    store node (id=2, comment = 'Slave Node', event node=1);
    store path (server = 1, client = 2,
                conninfo='dbname=$MASTERDBNAME host=$MASTERHOST user=$REPLICATIONUSER');
    store path (server = 2, client = 1,
                conninfo='dbname=$SLAVEDBNAME host=$SLAVEHOST user=$REPLICATIONUSER');
    _EOF_

    [postgres@localhost data]$ sh slony_setup.sh

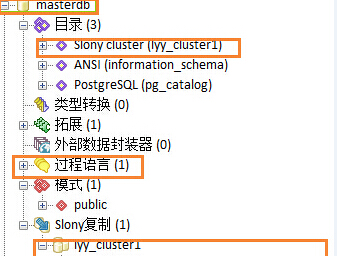

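Note: when this step finishes, a Slony-I cluster named lyy_cluster1 has been initialized.

It also creates a schema named _lyy_cluster1 holding the configuration tables, sequences, functions, and triggers that Slony-I needs at run time (mostly from slony1_base.2.2.4.sql and slony1_funcs.2.2.4.sql, which the Slony-I installation places under /opt/pgsql92/share).

The corresponding triggers are also created on the replicated table lyy.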

On the master:

    masterdb=# \d lyy
               Table "public.lyy"
     Column |       Type        | Modifiers
    --------+-------------------+-----------
     id     | integer           | not null
     name   | character varying |
    Indexes:
        "lyy_pkey" PRIMARY KEY, btree (id)
    Triggers:
        _lyy_cluster1_logtrigger AFTER INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster1.logtrigger('_lyy_cluster1', '1', 'k')
        _lyy_cluster1_truncatetrigger BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster1.log_truncate('1')
        _lyy_cluster_logtrigger AFTER INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster.logtrigger('_lyy_cluster', '1', 'k')
        _lyy_cluster_truncatetrigger BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster.log_truncate('1')
    Disabled triggers:
        _lyy_cluster1_denyaccess BEFORE INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster1.denyaccess('_lyy_cluster1')
        _lyy_cluster1_truncatedeny BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster1.deny_truncate()
        _lyy_cluster_denyaccess BEFORE INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster.denyaccess('_lyy_cluster')
        _lyy_cluster_truncatedeny BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster.deny_truncate()

On the slave:

    slavedb=# \d lyy
               Table "public.lyy"
     Column |       Type        | Modifiers
    --------+-------------------+-----------
     id     | integer           | not null
     name   | character varying |
    Indexes:
        "lyy_pkey" PRIMARY KEY, btree (id)
    Triggers:
        _lyy_cluster1_denyaccess BEFORE INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster1.denyaccess('_lyy_cluster1')
        _lyy_cluster1_truncatedeny BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster1.deny_truncate()
    Disabled triggers:
        _lyy_cluster1_logtrigger AFTER INSERT OR DELETE OR UPDATE ON lyy FOR EACH ROW EXECUTE PROCEDURE _lyy_cluster1.logtrigger('_lyy_cluster1', '1', 'k')
        _lyy_cluster1_truncatetrigger BEFORE TRUNCATE ON lyy FOR EACH STATEMENT EXECUTE PROCEDURE _lyy_cluster1.log_truncate('1')

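Start the master node and its monitor on the master

On the master, start the slon daemon:

    [postgres@localhost bin]$ ./slon lyy_cluster1 "dbname=masterdb user=postgres host=192.168.100.240" &

The terminal that runs this command keeps printing status messages, so do not close it or use it for anything else.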
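If tying up a terminal is inconvenient, one option is to detach the daemon and send its output to a log file. The sketch below is an assumption, not part of the original procedure: `start_in_background` is a hypothetical helper, and the log and PID file paths in the usage line are placeholders.

```shell
#!/bin/sh
# start_in_background LOGFILE PIDFILE CMD [ARGS...]
# Run CMD detached from the terminal, append its output to LOGFILE,
# and record its process id in PIDFILE.
start_in_background() {
    logfile="$1"
    pidfile="$2"
    shift 2
    nohup "$@" >> "$logfile" 2>&1 &
    echo "$!" > "$pidfile"
}
```

A possible invocation on the master is `start_in_background /tmp/slon_node1.log /tmp/slon_node1.pid ./slon lyy_cluster1 "dbname=masterdb user=postgres host=192.168.100.240"`, after which the status messages can be followed with `tail -f /tmp/slon_node1.log`.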

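Start the slave node and its monitor on the slave

On the slave, start the slon daemon (the cluster name must be the same one used on the master):

    [postgres@localhost bin]$ ./slon lyy_cluster1 "dbname=slavedb user=postgres host=192.168.100.241" &

As on the master, the terminal that runs this command keeps printing status messages, so do not close it or use it for anything else.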

Subscribe the slave to the master (run on the master)

On the master, perform the subscription with the script subscribe_set.sh:

    [postgres@localhost data]$ vi subscribe_set.sh

    CLUSTERNAME=lyy_cluster1
    MASTERDBNAME=masterdb
    SLAVEDBNAME=slavedb
    MASTERHOST=192.168.100.240
    SLAVEHOST=192.168.100.241
    REPLICATIONUSER=postgres

    /opt/pgsql92/bin/slonik <<_EOF_
    # ----
    # This defines which namespace the replication system uses
    # ----
    cluster name = $CLUSTERNAME;

    # ----
    # Admin conninfo's are used by the slonik program to connect
    # to the node databases. So these are the PQconnectdb arguments
    # that connect from the administrators workstation (where
    # slonik is executed).
    # ----
    node 1 admin conninfo = 'dbname=$MASTERDBNAME host=$MASTERHOST user=$REPLICATIONUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDBNAME host=$SLAVEHOST user=$REPLICATIONUSER';

    # ----
    # Node 2 subscribes set 1
    # ----
    subscribe set ( id = 1, provider = 1, receiver = 2, forward = no);
    _EOF_

    [postgres@localhost data]$ sh subscribe_set.sh

At this point, Slony-I replication for this database is fully configured.

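Verify that Slony-I replication works

On the master, insert a row into table lyy:

    [postgres@localhost bin]$ ./psql -U postgres -d masterdb
    psql (9.2.13)
    Type "help" for help.

    masterdb=# insert into lyy values(1,'lyy');
    INSERT 0 1

On the slave, query table lyy (the first query below ran before the row had been replicated, the second one after):

    [postgres@localhost bin]$ ./psql -U postgres -d slavedb
    psql (9.2.13)
    Type "help" for help.

    slavedb=# select * from lyy;
     id | name
    ----+------
    (0 rows)

    slavedb=# select * from lyy;
     id | name
    ----+------
      1 | lyy
    (1 row)

You can run further inserts and deletes on the master and then query the slave to compare the results.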
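Because replication is asynchronous, a scripted check has to retry rather than query once. A minimal sketch of such a check follows; the helper names `wait_until` and `row_on_slave` and the `WAIT_TRIES` variable are hypothetical, and the psql path and connection values are simply the ones used elsewhere in this post.

```shell
#!/bin/sh
# wait_until CMD [ARGS...]: run CMD once per second until it succeeds,
# giving up after WAIT_TRIES attempts (default 30).
wait_until() {
    tries="${WAIT_TRIES:-30}"
    while [ "$tries" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] && sleep 1
    done
    return 1
}

# row_on_slave ID: succeed once the row with the given id exists in lyy on the slave.
row_on_slave() {
    count=$(/opt/pgsql92/bin/psql -At -U postgres -h 192.168.100.241 -d slavedb \
        -c "SELECT count(*) FROM lyy WHERE id = $1")
    [ "$count" = "1" ]
}
```

After the insert on the master, `wait_until row_on_slave 1 && echo replicated` reports success as soon as the row shows up on the slave and fails if it has not arrived within the retry limit.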

4.2 Configuring and starting with the altperl scripts (method 2 above):

Configure slon_tools.conf and initialize the Slony-I cluster

By default, a sample configuration file is installed as /usr/local/etc/slon_tools.conf-sample.

    cd /usr/local/etc
    # copy slon_tools.conf-sample to slon_tools.conf
    [root@localhost etc]# cp slon_tools.conf-sample /usr/local/etc/slon_tools.conf
    # edit slon_tools.conf
    [root@localhost etc]# vi slon_tools.conf

Configuration details:

    # Set CLUSTER_NAME to the cluster name chosen earlier.
    # The name of the replication cluster.  This will be used to
    # create a schema named _$CLUSTER_NAME in the database which will
    # contain Slony-related data.
    $CLUSTER_NAME = 'lyy_cluster1';

    # Directory for the Slony PID files. If it does not exist, create it
    # and grant the required permissions.
    # The directory where Slony store PID files.  This
    # directory will need to be writable by the user that invokes
    # Slony.
    $PIDFILE_DIR = '/var/run/slony1';

    # Directory for the log files. If it does not exist, either change
    # this setting or create the directory.
    # The directory where Slony should record log messages.  This
    # directory will need to be writable by the user that invokes
    # Slony.
    $LOGDIR = '/var/log/slony1';

    # Replace the node entries with the master and slave nodes; the extra
    # nodes in the sample can be commented out or deleted.
    # Include add_node lines for each node in the cluster.  Be sure to
    # use host names that will resolve properly on all nodes
    # (i.e. only use 'localhost' if all nodes are on the same host).
    # Also, note that the user must be a superuser account.
    add_node(node     => 1,
             host     => '192.168.100.240',
             dbname   => 'masterdb',
             port     => 5432,
             user     => 'postgres',
             password => 'postgres');

    add_node(node     => 2,
             host     => '192.168.100.241',
             dbname   => 'slavedb',
             port     => 5432,
             user     => 'postgres',
             password => 'postgres');

    # Tables and sequences to replicate (these keys sit inside the set definition).
    # This array contains a list of tables that already have primary keys.
    "pkeyedtables" => [
                       'public.lyy',
                      ],

    # If there are no sequences or tables without primary keys to replicate,
    # comment out the samples below.
    # For tables that have unique not null keys, but no primary
    # key, enter their names and indexes here.
    # lyy: commented out
    # "keyedtables" => {
    #     'table3' => 'index_on_table3',
    #     'table4' => 'index_on_table4',
    # },

    # Sequences that need to be replicated should be entered here.
    # lyy: commented out
    # "sequences" => ['sequence1',
    #                 'sequence2',
    #                ],
    },
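The PID and log directories named in the configuration must exist and be writable by whoever starts slon. A small check along these lines can be run before the daemons are started; `ensure_writable_dir` is a hypothetical helper written for this illustration.

```shell
#!/bin/sh
# ensure_writable_dir DIR: create DIR if it is missing, then fail
# unless the current user can write to it.
ensure_writable_dir() {
    dir="$1"
    mkdir -p "$dir" || return 1
    if [ ! -w "$dir" ]; then
        echo "not writable by $(id -un): $dir" >&2
        return 1
    fi
}
```

Run as the user that will invoke slon, for instance `ensure_writable_dir /var/run/slony1 && ensure_writable_dir /var/log/slony1`. If it fails, create the directories as root and hand ownership to that user.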

    # On the master, initialize the Slony-I cluster
    [root@localhost etc]# slonik_init_cluster | /opt/pgsql92/bin/slonik
    <stdin>:10: Set up replication nodes
    <stdin>:13: Next: configure paths for each node/origin
    <stdin>:16: Replication nodes prepared
    <stdin>:17: Please start a slon replication daemon for each node

Note: likewise, when this step finishes, a Slony-I cluster named lyy_cluster1 has been initialized.

It also creates a schema named _lyy_cluster1 holding the configuration tables, sequences, functions, and triggers that Slony-I needs at run time (mostly from slony1_base.2.2.4.sql and slony1_funcs.2.2.4.sql, which the Slony-I installation places under /opt/pgsql92/share).

Start slon and subscribe the set:

    # On the master, start slon for node 1
    [root@localhost etc]# slon_start 1
    Invoke slon for node 1 - /opt/pgsql92/bin//slon -p /var/run/slony1/lyy_cluster1_node1.pid -s 1000 -d2 lyy_cluster1 'host=192.168.100.240 dbname=masterdb user=postgres port=5432 password=postgres' > /var/log/slony1/node1/masterdb-2015-09-15.log 2>&1 &
    Slon successfully started for cluster lyy_cluster1, node node1
    PID [7839]
    Start the watchdog process as well...

    # On the slave, start slon for node 2
    [root@localhost etc]# slon_start 2
    Invoke slon for node 2 - /opt/pgsql92/bin//slon -p /var/run/slony1/lyy_cluster1_node2.pid -s 1000 -d2 lyy_cluster1 'host=192.168.100.241 dbname=slavedb user=postgres port=5432 password=postgres' > /var/log/slony1/node2/slavedb-2015-09-15.log 2>&1 &
    Slon successfully started for cluster lyy_cluster1, node node2
    PID [7613]
    Start the watchdog process as well...

    # On the master, create the replication set (the argument 1 is the set ID)
    [root@localhost etc]# slonik_create_set 1 | /opt/pgsql92/bin/slonik
    <stdin>:11: Subscription set 1 (set1_name) created
    <stdin>:12: Adding tables to the subscription set
    <stdin>:16: Add primary keyed table public.lyy
    <stdin>:19: Adding sequences to the subscription set
    <stdin>:20: All tables added

    # On the master, subscribe node 2 to set 1 (1 = set ID, 2 = node ID)
    [root@localhost etc]# slonik_subscribe_set 1 2 | /opt/pgsql92/bin/slonik
    <stdin>:6: Subscribed nodes to set 1

At this point, Slony-I replication for this database is fully configured.

Verify that Slony-I replication works

Verification is the same as in 4.1: on the master, insert a row into lyy (insert into lyy values(1,'lyy');), then on the slave run select * from lyy; until the row appears. Further inserts and deletes on the master can be checked on the slave in the same way.

5. Other Slony-I operations

Switchover (the master becomes a slave and the slave is promoted to master):

    slonik_move_set set1 node1 node2 | /opt/pgsql92/bin/slonik

Failover:

    slonik_failover node1 node2 | /opt/pgsql92/bin/slonik
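For readers following method 1, who have no slon_tools.conf, the switchover can also be written directly in slonik using its lock set and move set commands. The sketch below only prints such a script; the helper name `switchover_script` is hypothetical, and the defaults are the cluster name, databases, and hosts used in this post.

```shell
#!/bin/sh
# switchover_script SET_ID OLD_ORIGIN NEW_ORIGIN
# Print a slonik script that moves the given set to a new origin node.
# Connection values fall back to the ones used in this post when the
# environment variables from section 2.1 are not set.
switchover_script() {
    set_id="$1"
    old_origin="$2"
    new_origin="$3"
    cat <<EOF
cluster name = ${CLUSTERNAME:-lyy_cluster1};
node 1 admin conninfo = 'dbname=${MASTERDBNAME:-masterdb} host=${MASTERHOST:-192.168.100.240} user=${REPLICATIONUSER:-postgres}';
node 2 admin conninfo = 'dbname=${SLAVEDBNAME:-slavedb} host=${SLAVEHOST:-192.168.100.241} user=${REPLICATIONUSER:-postgres}';
lock set (id = $set_id, origin = $old_origin);
move set (id = $set_id, old origin = $old_origin, new origin = $new_origin);
EOF
}
```

Reviewing the output of `switchover_script 1 1 2` before piping it into /opt/pgsql92/bin/slonik keeps the step explicit. Unlike failover, a switchover needs both nodes and both slon daemons to be running.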

References:

http://www.cnblogs.com/gaojian/p/3196244.html

http://blog.chinaunix.net/uid-15145533-id-2775796.html

pgbench can also be used to test Slony-I replication.

pgbench usage: http://www.postgresql.org/docs/9.2/static/pgbench.html
