关于被动式扫描的碎碎念

0x00 简介

分布式扫描好多人都写过,例如:

burp的sqli插件

Matt前辈的 http://zone.wooyun.org/content/24172

猪猪侠前辈的 http://zone.wooyun.org/content/21289

Ver007前辈的 http://zone.wooyun.org/content/24333

0x_Jin前辈的 http://zone.wooyun.org/content/24341

填上个坑填的心烦,想着也造个轮子,忙活了几天,写了一个简单的雏形

Github: https://github.com/liuxigu/ScanSqlTestchromeExtensions

在此感谢bstaint、sunshadow的帮助

Sqlmapapi本来就是为了实现分布式注入写的,在被动扫描的基础上 加节点就实现分布式了

最初想的是用chrome插件来实现代码注入

- 用js来获取

<a>标签的同域url,用js是防止一些站的反爬虫措施,还有对于a href指向相对链接的的情况,用js会自动补全域名. - Chrome webRequest API OnBeforeRequest获取即将请求的url

设想获取url后 喂给sqlmapapi, 将能注入的url写入到文本里,js 的 FileSystemObject gg.. 本来是准备用php实现文件io的…

talk is cheap show me the code.

0x01 Chrome manifest.json

#!js { "name": "sqlInjectionTest", "version": "0.1", "description": "you know...", "manifest_version": 2, "content_scripts": [{ "matches":["*://*/*"], "js": ["inject.js"] }], "permissions": [ "*://*/*", "webRequest", "webRequestBlocking" ], "browser_action": { "default_icon": "icon.png" , "default_title": "scan url inject" } } 0x02 Sqlmapapi.py code

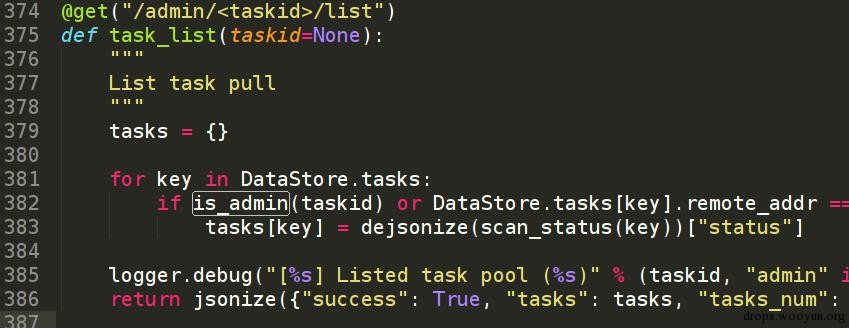

一:固定Admin Id

Sqlmapapi启动后 是这样子:

#!bash root@kali:~/桌面/sqlmap# python sqlmapapi.py -s [22:02:17] [INFO] Running REST-JSON API server at '127.0.0.1:8775'.. [22:02:17] [INFO] Admin ID: 7c4be58c7aab5f38cb09eb534a41d86b [22:02:17] [DEBUG] IPC database: /tmp/sqlmapipc-5JVeNo [22:02:17] [DEBUG] REST-JSON API server connected to IPC database AdminID每次都会变,这样导致任务管理不方便,我们更改一下sqlmap的源码

定位到 /sqlmap/lib/utils/api.py 的server函数

看到644行的os.urandom,直接改成一个固定字符串就行了

例如 我改成了 DataStore.admin_id = hexencode('wooyun')

以后就固定是Admin ID: 776f6f79756e

还有个更简单的办法

return True

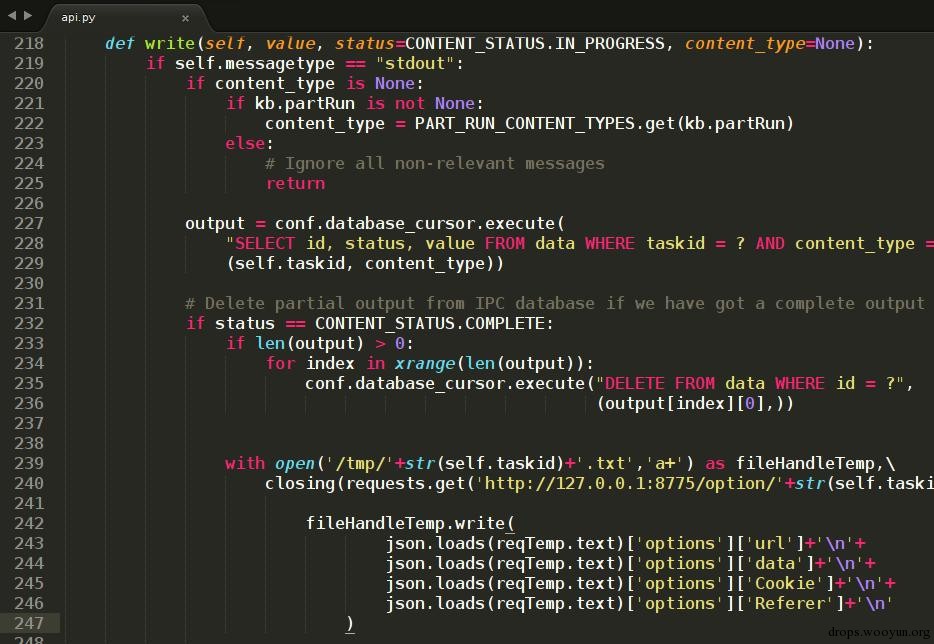

二:sqlmap扫描任务结束自动写入文本

判断当前任务是否扫描完成 访问 http://127.0.0.1:8775/admin/ss/list

#!python { "tasks": { "4db4e3bd4410efa9": "terminated" }, "tasks_num": 1, "success": true } Terminated代表任务已终止,

http://127.0.0.1:8775/scan/4db4e3bd4410efa9/data

#!python { "data": [], "success": true, "error": [] } “data”存放了sqlmapapi检测时用的payload, “data”非空就代表当前任务是可注入的,sqlmapapi并没有自带回调方式…轮询浪费开销,这里我选择修改源码

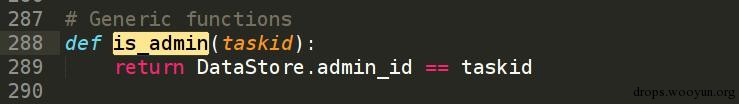

定位到 scan_data 函数 ,可以看到,假如可注入,就会从data表检索数据并写入到 json_data_message ,表名为data, 代码向上翻,定位到将数据入库的代码

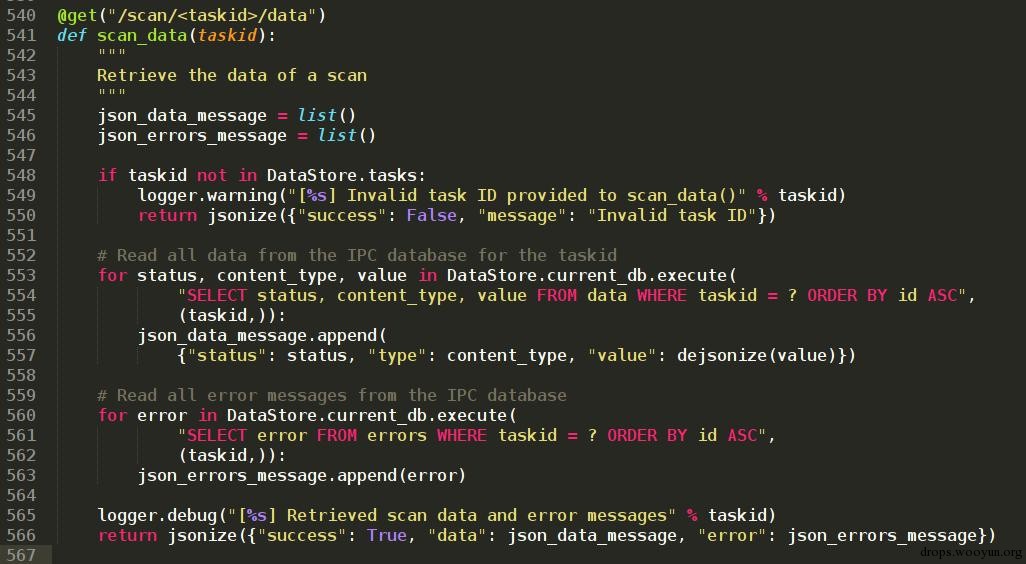

在StdDbOut类里,第230行,在insert前 插入

#!python with open('/tmp/'+str(self.taskid)+'.txt','a+') as fileHandleTemp,/ closing(requests.get('http://127.0.0.1:8775/option/'+str(self.taskid)+'/list', stream=True)) as reqTemp: fileHandleTemp.write( json.loads(reqTemp.text)['options']['url']+'/n'+ json.loads(reqTemp.text)['options']['data']+'/n'+ json.loads(reqTemp.text)['options']['Cookie']+'/n'+ json.loads(reqTemp.text)['options']['Referer']+'/n' ) 记得加载三个模块

#!python import json import requests from contextlib import closing 本意是获取能注入的url写入到文本里,在源码里没找到继承这个类的地方…懒得去找了

访问 http://127.0.0.1:8775/option/id/list

#!python Response: { "options": { ...... "url": http://58.59.39.43:9080/wscgs/xwl.do?smid=02&bgid=01&bj=8 …… } "success":{ ... }

0x03 inject.js code

1.

那么要过滤掉javascript::伪协议和无sql操作的href

看到有这样写的:

#!js if re.match('^(javascript|:;|#)',_url) or _url is None or re.match('.(jpg|png|bmp|mp3|wma|wmv|gz|zip|rar|iso|pdf|txt|db)$',_url): 甚至这样的:

#!js filename=urlpath[i+1:len(urlpath)] print "Filename: ",filename res=filename.split('.') if(len(res)>1): extname=res[-1] ext=["css","js","jpg","jpeg","gif","png","bmp","html","htm","swf","ico","ttf","woff","svg","cur","woff2"] for blacklist in ext: if(extname==blacklist): return False 这两种方式假如遇到这样的url: http://xxx/aaa/ ,就会造成无意义的开销.

判断是否可以进行get注入测试,其实只需要

str.match(/[/?]/); 无get参数的页面会返回null

我们这样写: /http(s)?://// ([/w/W-]+//)+ ([/w/W]+/?)+/;

再加上同域过滤,js中没有php那样可以在字符串中用{}引用变量的值,要在正则中拼接变量需要用RegExp对象:

#!js var urlLegalExpr="http(s)?:////"+document.domain+"([/////w//W]+//?)+"; var objExpr=new RegExp(urlLegalExpr,"gi"); 2.

Js是在http response后执行的,要进行post注入,必须在OnBeforeRequest之前获取,chrome提供了相关的api,这个没什么可说的 ,看代码吧

inject.js code:

#!js main(); function main(){ var urlLegalExpr="http(s)?:////"+document.domain+"([/////w//W]+//?)+"; var objExpr=new RegExp(urlLegalExpr,"gi"); urlArray=document.getElementsByTagName('a'); for(i=0;i<urlArray.length;i++){ if(objExpr.test(urlArray[i].href)){ sqlScanTest(urlArray[i].href); } } } function sqlScanTest(url,payload){ sqlmapIpPort="http://127.0.0.1:8775"; var payload=arguments[1] ||'{"url": "'+url+'","User-Agent":"wooyun"}'; Connection('GET',sqlmapIpPort+'/task/new','',function(callback){ var response=JSON.parse(callback); if(response.success){ Connection('POST',sqlmapIpPort+'/scan/'+response.taskid+'/start',payload,function(callback){ var responseTemp=JSON.parse(callback); if(!responseTemp.success){ alert('url send to sqlmapapi error'); } } ) } else{ alert('sqlmapapi create task error'); } } ) } function Connection(Sendtype,url,content,callback){ if (window.XMLHttpRequest){ var xmlhttp=new XMLHttpRequest(); } else{ var xmlhttp=new ActiveXObject("Microsoft.XMLHTTP"); } xmlhttp.onreadystatechange=function(){ if(xmlhttp.readyState==4&&xmlhttp.status==200) { callback(xmlhttp.responseText); } } xmlhttp.open(Sendtype,url,true); xmlhttp.setRequestHeader("Content-Type","application/json"); xmlhttp.send(content); } function judgeUrl(url){ var objExpr=new RegExp(/^http(s)?:////127/.0/.0/.1/); return objExpr.test(url); } var payload={}; chrome.webRequest.onBeforeRequest.addListener( function(details){ if(details.method=="POST" && !judgeUrl(details.url)){ var saveParamTemp=""; for(var i in details.requestBody.formData){ saveParamTemp+="&"+i+"="+details.requestBody.formData[i][0]; } saveParamTemp=saveParamTemp.replace(/^&/,''); //console.log(saveParamTemp); payload["url"]=details.url; payload["data"]=saveParamTemp; } //console.log(details); }, {urls: ["<all_urls>"]}, ["requestBody"]); chrome.webRequest.onBeforeSendHeaders.addListener( function(details) { if(details.method=="POST" && !judgeUrl(details.url)){ //var cookieTemp="",uaTemp="",refererTemp=""; for(var ecx=0;ecx<details.requestHeaders.length;ecx++){ switch (details.requestHeaders[ecx].name){ case "Cookie": payload["Cookie"]=details.requestHeaders[ecx].value; break; case "User-Agent": payload["User-Agent"]=details.requestHeaders[ecx].value; break; case "Referer": payload["Referer"]=details.requestHeaders[ecx].value; break; default: break; } } sqlScanTest("test",JSON.stringify(payload)); return {requestHeaders: details.requestHeaders}; } }, {urls: ["<all_urls>"]}, ["requestHeaders"]); Sqlmap能用的选项都可以在 http://ip:port/option/taskid/list 里查看,用到哪项写到payload里就行了,最好是做成实时刷新代理,之前写过爬虫的时候写过一个python版的,有空的话会改成js加入到inject.js里.

0x04 参考文献

- 《使用sqlmapapi.py批量化扫描实践》 http://drops.wooyun.org/tips/6653

- 《chrome webRequest API》 https://developer.chrome.com/extensions/webRequest

马上2016了,希望在年前把上个坑填完

也祝各位心想事成

- 本文标签: parse Chrome DDL java 源码 CSS HTML list src rmi 测试 js 数据 App value lib 协议 https UI 域名 XML Connection root git map 代码 json Developer python PHP ip sql URLs 参数 ACE web mina cat GitHub DOM REST http zip db description API Document 插件 ask XEN tab tar 管理

- 版权声明: 本文为互联网转载文章,出处已在文章中说明(部分除外)。如果侵权,请联系本站长删除,谢谢。

- 本文海报: 生成海报一 生成海报二

![[HBLOG]公众号](https://www.liuhaihua.cn/img/qrcode_gzh.jpg)