JVM初探- 使用堆外内存减少Full GC

JVM初探-使用堆外内存减少Full GC

标签 : JVM

问题: 大部分主流互联网企业线上Server JVM选用了CMS收集器(如Taobao、LinkedIn、Vdian), 虽然CMS可与用户线程并发GC以降低STW时间, 但它也并非十分完美, 尤其是当出现 Concurrent Mode Failure 由并行GC转入串行时, 将导致非常长时间的 Stop The World (详细可参考 JVM初探- 内存分配、GC原理与垃圾收集器 ).

解决: 由 GCIH 可以联想到: 将长期存活的对象(如Local Cache)移入堆外内存(off-heap, 又名 直接内存/direct-memory ) , 从而减少CMS管理的对象数量, 以降低Full GC的次数和频率, 达到提高系统响应速度的目的.

引入

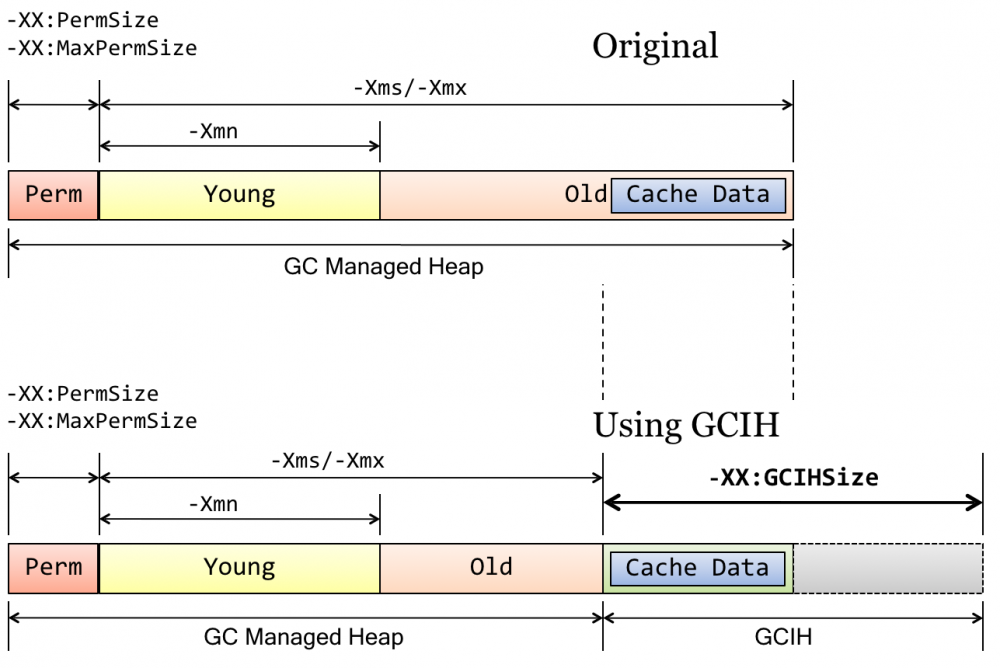

这个idea最初来源于TaobaoJVM对OpenJDK定制开发的GCIH部分(详见 撒迦 的分享- JVM定制改进@淘宝 ), 其中GCIH就是将CMS Old Heap区的一部分划分出来, 这部分内存虽然还在堆内, 但已不被GC所管理. 将长生命周期Java对象放在Java堆外, GC不能管理GCIH内Java对象(GC Invisible Heap) :

(图片来源: JVM@Taobao PPT)

-

这样做有两方面的好处:

- 减少GC管理内存:

由于GCIH会从Old区 “切出” 一块, 因此导致GC管理区域变小, 可以明显降低GC工作量, 提高GC效率, 降低Full GC STW时间(且由于这部分内存仍属于堆, 因此其访问方式/速度不变- 不必付出序列化/反序列化的开销 ). - GCIH内容进程间共享:

由于这部分区域不再是JVM运行时数据的一部分, 因此GCIH内的对象可供对个JVM实例所共享(如一台Server跑多个MR-Job可共享同一份Cache数据), 这样一台Server也就可以跑更多的VM实例.

- 减少GC管理内存:

(实际测试数据/图示可下载 撒迦 分享 PPT ).

但是大部分的互联公司不能像阿里这样可以有专门的工程师针对自己的业务特点定制JVM, 因此我们只能”眼馋”GCIH带来的性能提升却无法”享用”. 但通用的JVM开放了接口可直接向操作系统申请堆外内存( ByteBuffer or Unsafe ), 而这部分内存也是GC所顾及不到的, 因此我们可用JVM堆外内存来模拟GCIH的功能(但相比GCIH不足的是需要付出serialize/deserialize的开销).

JVM堆外内存

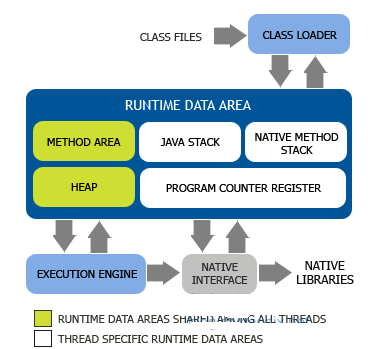

在JVM初探 -JVM内存模型一文中介绍的 Java运行时数据区域 中是找不到堆外内存区域的:

因为它并不是JVM运行时数据区的一部分, 也不是Java虚拟机规范中定义的内存区域, 这部分内存区域直接被操作系统管理.

在JDK 1.4以前, 对这部分内存访问没有光明正大的做法: 只能通过反射拿到 Unsafe 类, 然后调用 allocateMemory()/freeMemory() 来申请/释放这块内存. 1.4开始新加入了NIO, 它引入了一种基于Channel与Buffer的I/O方式, 可以使用Native函数库直接分配堆外内存, 然后通过一个存储在Java堆里面的 DirectByteBuffer 对象作为这块内存的引用进行操作, ByteBuffer 提供了如下常用方法来跟堆外内存打交道:

| API | 描述 |

|---|---|

static ByteBuffer allocateDirect(int capacity) |

Allocates a new direct byte buffer. |

ByteBuffer put(byte b) |

Relative put method (optional operation). |

ByteBuffer put(byte[] src) |

Relative bulk put method (optional operation). |

ByteBuffer putXxx(Xxx value) |

Relative put method for writing a Char/Double/Float/Int/Long/Short value (optional operation). |

ByteBuffer get(byte[] dst) |

Relative bulk get method. |

Xxx getXxx() |

Relative get method for reading a Char/Double/Float/Int/Long/Short value. |

XxxBuffer asXxxBuffer() |

Creates a view of this byte buffer as a Char/Double/Float/Int/Long/Short buffer. |

ByteBuffer asReadOnlyBuffer() |

Creates a new, read-only byte buffer that shares this buffer’s content. |

boolean isDirect() |

Tells whether or not this byte buffer is direct. |

ByteBuffer duplicate() |

Creates a new byte buffer that shares this buffer’s content. |

下面我们就用通用的JDK API来使用堆外内存来实现一个 local cache .

示例1.: 使用JDK API实现堆外Cache

注: 主要逻辑都集中在方法 invoke() 内, 而 AbstractAppInvoker 是一个自定义的性能测试框架, 在后面会有详细的介绍.

/**

* @author jifang

* @since 2016/12/31 下午6:05.

*/

public class DirectByteBufferApp extends AbstractAppInvoker {

@Test

@Override

public void invoke(Object... param) {

Map<String, FeedDO> map = createInHeapMap(SIZE);

// move in off-heap

byte[] bytes = serializer.serialize(map);

ByteBuffer buffer = ByteBuffer.allocateDirect(bytes.length);

buffer.put(bytes);

buffer.flip();

// for gc

map = null;

bytes = null;

System.out.println("write down");

// move out from off-heap

byte[] offHeapBytes = new byte[buffer.limit()];

buffer.get(offHeapBytes);

Map<String, FeedDO> deserMap = serializer.deserialize(offHeapBytes);

for (int i = 0; i < SIZE; ++i) {

String key = "key-" + i;

FeedDO feedDO = deserMap.get(key);

checkValid(feedDO);

if (i % 10000 == 0) {

System.out.println("read " + i);

}

}

free(buffer);

}

private Map<String, FeedDO> createInHeapMap(int size) {

long createTime = System.currentTimeMillis();

Map<String, FeedDO> map = new ConcurrentHashMap<>(size);

for (int i = 0; i < size; ++i) {

String key = "key-" + i;

FeedDO value = createFeed(i, key, createTime);

map.put(key, value);

}

return map;

}

}

由JDK提供的堆外内存访问API只能申请到一个类似一维数组的 ByteBuffer , JDK并未提供基于堆外内存的实用数据结构实现(如堆外的 Map 、 Set ), 因此想要实现Cache的功能只能在 write() 时先将数据 put() 到一个堆内的 HashMap , 然后再将整个 Map 序列化后 MoveIn 到 DirectMemory , 取缓存则反之. 由于需要在堆内申请 HashMap , 因此可能会导致多次Full GC. 这种方式虽然可以使用堆外内存, 但性能不高、无法发挥堆外内存的优势.

幸运的是开源界的前辈开发了诸如 Ehcache 、 MapDB 、 Chronicle Map 等一系列优秀的堆外内存框架, 使我们可以在使用简洁API访问堆外内存的同时又不损耗额外的性能.

其中又以Ehcache最为强大, 其提供了in-heap、off-heap、on-disk、cluster四级缓存, 且Ehcache企业级产品( BigMemory Max / BigMemory Go )实现的BigMemory也是Java堆外内存领域的先驱.

示例2: MapDB API实现堆外Cache

public class MapDBApp extends AbstractAppInvoker {

private static HTreeMap<String, FeedDO> mapDBCache;

static {

mapDBCache = DBMaker.hashMapSegmentedMemoryDirect()

.expireMaxSize(SIZE)

.make();

}

@Test

@Override

public void invoke(Object... param) {

for (int i = 0; i < SIZE; ++i) {

String key = "key-" + i;

FeedDO feed = createFeed(i, key, System.currentTimeMillis());

mapDBCache.put(key, feed);

}

System.out.println("write down");

for (int i = 0; i < SIZE; ++i) {

String key = "key-" + i;

FeedDO feedDO = mapDBCache.get(key);

checkValid(feedDO);

if (i % 10000 == 0) {

System.out.println("read " + i);

}

}

}

}

结果 & 分析

- DirectByteBufferApp

S0 S1 E O P YGC YGCT FGC FGCT GCT 0.00 0.00 5.22 78.57 59.85 19 2.902 13 7.251 10.153

- the last one jstat of MapDBApp

S0 S1 E O P YGC YGCT FGC FGCT GCT 0.00 0.03 8.02 0.38 44.46 171 0.238 0 0.000 0.238

运行 DirectByteBufferApp.invoke() 会发现有看到很多Full GC的产生, 这是因为HashMap需要一个很大的连续数组, Old区很快就会被占满, 因此也就导致频繁Full GC的产生.

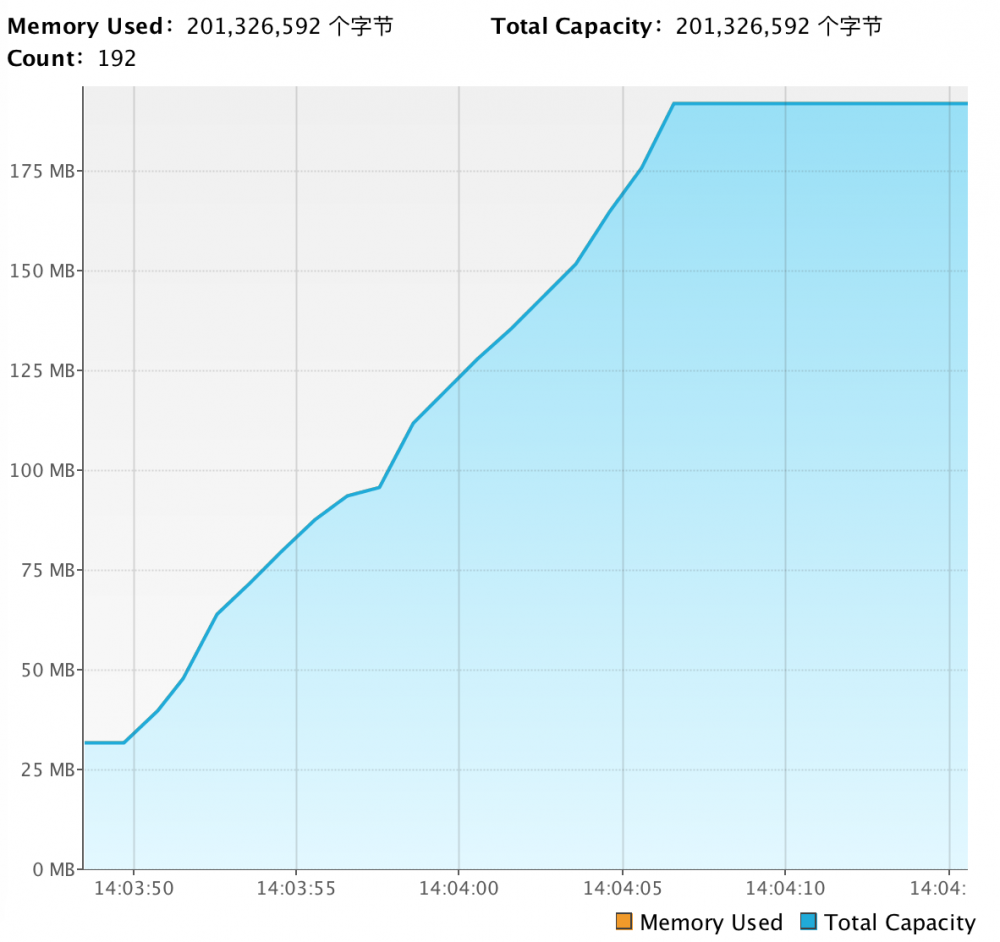

而运行 MapDBApp.invoke() 可以看到有一个 DirectMemory 持续增长的过程, 但FullGC却一次都没有了.

实验: 使用堆外内存减少Full GC

实验环境

- java -version

java version "1.7.0_79" Java(TM) SE Runtime Environment (build 1.7.0_79-b15) Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)

- VM Options

-Xmx512M -XX:MaxDirectMemorySize=512M -XX:+PrintGC -XX:+UseConcMarkSweepGC -XX:+CMSClassUnloadingEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:+UseCMSInitiatingOccupancyOnly

-

实验数据

170W条动态(FeedDO).

实验代码

第1组: in-heap、affect by GC、no serialize

- ConcurrentHashMapApp

public class ConcurrentHashMapApp extends AbstractAppInvoker {

private static final Map<String, FeedDO> cache = new ConcurrentHashMap<>();

@Test

@Override

public void invoke(Object... param) {

// write

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

FeedDO feedDO = createFeed(i, key, System.currentTimeMillis());

cache.put(key, feedDO);

}

System.out.println("write down");

// read

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

FeedDO feedDO = cache.get(key);

checkValid(feedDO);

if (i % 10000 == 0) {

System.out.println("read " + i);

}

}

}

}

GuavaCacheApp类似, 详细代码可参考完整项目.

第2组: off-heap、not affect by GC、need serialize

- EhcacheApp

public class EhcacheApp extends AbstractAppInvoker {

private static Cache<String, FeedDO> cache;

static {

ResourcePools resourcePools = ResourcePoolsBuilder.newResourcePoolsBuilder()

.heap(1000, EntryUnit.ENTRIES)

.offheap(480, MemoryUnit.MB)

.build();

CacheConfiguration<String, FeedDO> configuration = CacheConfigurationBuilder

.newCacheConfigurationBuilder(String.class, FeedDO.class, resourcePools)

.build();

cache = CacheManagerBuilder.newCacheManagerBuilder()

.withCache("cacher", configuration)

.build(true)

.getCache("cacher", String.class, FeedDO.class);

}

@Test

@Override

public void invoke(Object... param) {

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

FeedDO feedDO = createFeed(i, key, System.currentTimeMillis());

cache.put(key, feedDO);

}

System.out.println("write down");

// read

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

Object o = cache.get(key);

checkValid(o);

if (i % 10000 == 0) {

System.out.println("read " + i);

}

}

}

}

MapDBApp与前同.

第3组: off-process、not affect by GC、serialize、affect by process communication

- LocalRedisApp

public class LocalRedisApp extends AbstractAppInvoker {

private static final Jedis cache = new Jedis("localhost", 6379);

private static final IObjectSerializer serializer = new Hessian2Serializer();

@Test

@Override

public void invoke(Object... param) {

// write

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

FeedDO feedDO = createFeed(i, key, System.currentTimeMillis());

byte[] value = serializer.serialize(feedDO);

cache.set(key.getBytes(), value);

if (i % 10000 == 0) {

System.out.println("write " + i);

}

}

System.out.println("write down");

// read

for (int i = 0; i < SIZE; ++i) {

String key = String.format("key_%s", i);

byte[] value = cache.get(key.getBytes());

FeedDO feedDO = serializer.deserialize(value);

checkValid(feedDO);

if (i % 10000 == 0) {

System.out.println("read " + i);

}

}

}

}

RemoteRedisApp类似, 详细代码可参考下面完整项目.

实验结果

| * | ConcurrentMap | Guava |

|---|---|---|

| TTC | 32166ms/32s | 47520ms/47s |

| Minor C/T | 31/1.522 | 29/1.312 |

| Full C/T | 24/23.212 | 36/41.751 |

| MapDB | Ehcache | |

| TTC | 40272ms/40s | 30814ms/31s |

| Minor C/T | 511/0.557 | 297/0.430 |

| Full C/T | 0/0.000 | 0/0.000 |

| LocalRedis | NetworkRedis | |

| TTC | 176382ms/176s | 1h+ |

| Minor C/T | 421/0.415 | - |

| Full C/T | 0/0.000 | - |

备注:

- TTC: Total Time Cost 总共耗时

- C/T: Count/Time 次数/耗时(seconds)

结果分析

对比前面几组数据, 可以有如下总结:

- 将长生命周期的大对象(如cache)移出heap可大幅度降低Full GC次数与耗时;

- 使用off-heap存储对象需要付出serialize/deserialize成本;

- 将cache放入分布式缓存需要付出进程间通信/网络通信的成本(UNIX Domain/TCP IP)

附:

off-heap的Ehcache能够跑出比in-heap的HashMap/Guava更好的成绩确实是我始料未及的O(∩_∩)O~, 但确实这些数据和堆内存的搭配导致in-heap的Full GC太多了, 当heap堆开大之后就肯定不是这个结果了. 因此在使用堆外内存降低Full GC前, 可以先考虑是否可以将heap开的更大.

附: 性能测试框架

在main函数启动时, 扫描 com.vdian.se.apps 包下的所有继承了 AbstractAppInvoker 的类, 然后使用 Javassist 为每个类生成一个代理对象: 当 invoke() 方法执行时首先检查他是否标注了 @Test 注解(在此, 我们借用junit定义好了的注解), 并在执行的前后记录方法执行耗时, 并最终对比每个实现类耗时统计.

- 依赖

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-proxy</artifactId>

<version>${commons.proxy.version}</version>

</dependency>

<dependency>

<groupId>org.javassist</groupId>

<artifactId>javassist</artifactId>

<version>${javassist.version}</version>

</dependency>

<dependency>

<groupId>com.caucho</groupId>

<artifactId>hessian</artifactId>

<version>${hessian.version}</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>${guava.version}</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>${junit.version}</version>

</dependency>

启动类: OffHeapStarter

/**

* @author jifang

* @since 2017/1/1 上午10:47.

*/

public class OffHeapStarter {

private static final Map<String, Long> STATISTICS_MAP = new HashMap<>();

public static void main(String[] args) throws IOException, IllegalAccessException, InstantiationException {

Set<Class<?>> classes = PackageScanUtil.scanPackage("com.vdian.se.apps");

for (Class<?> clazz : classes) {

AbstractAppInvoker invoker = createProxyInvoker(clazz.newInstance());

invoker.invoke();

//System.gc();

}

System.out.println("********************* statistics **********************");

for (Map.Entry<String, Long> entry : STATISTICS_MAP.entrySet()) {

System.out.println("method [" + entry.getKey() + "] total cost [" + entry.getValue() + "]ms");

}

}

private static AbstractAppInvoker createProxyInvoker(Object invoker) {

ProxyFactory factory = new JavassistProxyFactory();

Class<?> superclass = invoker.getClass().getSuperclass();

Object proxy = factory

.createInterceptorProxy(invoker, new ProfileInterceptor(), new Class[]{superclass});

return (AbstractAppInvoker) proxy;

}

private static class ProfileInterceptor implements Interceptor {

@Override

public Object intercept(Invocation invocation) throws Throwable {

Class<?> clazz = invocation.getProxy().getClass();

Method method = clazz.getMethod(invocation.getMethod().getName(), Object[].class);

Object result = null;

if (method.isAnnotationPresent(Test.class)

&& method.getName().equals("invoke")) {

String methodName = String.format("%s.%s", clazz.getSimpleName(), method.getName());

System.out.println("method [" + methodName + "] start invoke");

long start = System.currentTimeMillis();

result = invocation.proceed();

long cost = System.currentTimeMillis() - start;

System.out.println("method [" + methodName + "] total cost [" + cost + "]ms");

STATISTICS_MAP.put(methodName, cost);

}

return result;

}

}

}

- 包扫描工具: PackageScanUtil

public class PackageScanUtil {

private static final String CLASS_SUFFIX = ".class";

private static final String FILE_PROTOCOL = "file";

public static Set<Class<?>> scanPackage(String packageName) throws IOException {

Set<Class<?>> classes = new HashSet<>();

String packageDir = packageName.replace('.', '/');

Enumeration<URL> packageResources = Thread.currentThread().getContextClassLoader().getResources(packageDir);

while (packageResources.hasMoreElements()) {

URL packageResource = packageResources.nextElement();

String protocol = packageResource.getProtocol();

// 只扫描项目内class

if (FILE_PROTOCOL.equals(protocol)) {

String packageDirPath = URLDecoder.decode(packageResource.getPath(), "UTF-8");

scanProjectPackage(packageName, packageDirPath, classes);

}

}

return classes;

}

private static void scanProjectPackage(String packageName, String packageDirPath, Set<Class<?>> classes) {

File packageDirFile = new File(packageDirPath);

if (packageDirFile.exists() && packageDirFile.isDirectory()) {

File[] subFiles = packageDirFile.listFiles(new FileFilter() {

@Override

public boolean accept(File pathname) {

return pathname.isDirectory() || pathname.getName().endsWith(CLASS_SUFFIX);

}

});

for (File subFile : subFiles) {

if (!subFile.isDirectory()) {

String className = trimClassSuffix(subFile.getName());

String classNameWithPackage = packageName + "." + className;

Class<?> clazz = null;

try {

clazz = Class.forName(classNameWithPackage);

} catch (ClassNotFoundException e) {

// ignore

}

assert clazz != null;

Class<?> superclass = clazz.getSuperclass();

if (superclass == AbstractAppInvoker.class) {

classes.add(clazz);

}

}

}

}

}

// trim .class suffix

private static String trimClassSuffix(String classNameWithSuffix) {

int endIndex = classNameWithSuffix.length() - CLASS_SUFFIX.length();

return classNameWithSuffix.substring(0, endIndex);

}

}

注: 在此仅扫描 项目目录 下的 单层目录 的class文件, 功能更强大的包扫描工具可参考Spring源代码或 Touch源代码中的 PackageScanUtil 类 .

AppInvoker基类: AbstractAppInvoker

提供通用测试参数 & 工具函数.

public abstract class AbstractAppInvoker {

protected static final int SIZE = 170_0000;

protected static final IObjectSerializer serializer = new Hessian2Serializer();

protected static FeedDO createFeed(long id, String userId, long createTime) {

return new FeedDO(id, userId, (int) id, userId + "_" + id, createTime);

}

protected static void free(ByteBuffer byteBuffer) {

if (byteBuffer.isDirect()) {

((DirectBuffer) byteBuffer).cleaner().clean();

}

}

protected static void checkValid(Object obj) {

if (obj == null) {

throw new RuntimeException("cache invalid");

}

}

protected static void sleep(int time, String beforeMsg) {

if (!Strings.isNullOrEmpty(beforeMsg)) {

System.out.println(beforeMsg);

}

try {

Thread.sleep(time);

} catch (InterruptedException ignored) {

// no op

}

}

/**

* 供子类继承 & 外界调用

*

* @param param

*/

public abstract void invoke(Object... param);

}

序列化/反序列化接口与实现

public interface IObjectSerializer {

<T> byte[] serialize(T obj);

<T> T deserialize(byte[] bytes);

}

public class Hessian2Serializer implements IObjectSerializer {

private static final Logger LOGGER = LoggerFactory.getLogger(Hessian2Serializer.class);

@Override

public <T> byte[] serialize(T obj) {

if (obj != null) {

try (ByteArrayOutputStream os = new ByteArrayOutputStream()) {

Hessian2Output out = new Hessian2Output(os);

out.writeObject(obj);

out.close();

return os.toByteArray();

} catch (IOException e) {

LOGGER.error("Hessian serialize error ", e);

throw new CacherException(e);

}

}

return null;

}

@SuppressWarnings("unchecked")

@Override

public <T> T deserialize(byte[] bytes) {

if (bytes != null) {

try (ByteArrayInputStream is = new ByteArrayInputStream(bytes)) {

Hessian2Input in = new Hessian2Input(is);

T obj = (T) in.readObject();

in.close();

return obj;

} catch (IOException e) {

LOGGER.error("Hessian deserialize error ", e);

throw new CacherException(e);

}

}

return null;

}

}

完整项目地址: https://github.com/feiqing/off-heap-tester.git .

GC统计工具

#!/bin/bash

pid=`jps | grep $1 | awk '{print $1}'`

jstat -gcutil ${pid} 400 10000

-

使用

sh jstat-uti.sh ${u-main-class}

附加: 为什么在实验中in-heap cache的Minor GC那么少?

现在我还不能给出一个确切地分析答案, 有的同学说是因为CMS Full GC会连带一次Minor GC, 而用 jstat 会直接计入Full GC, 但查看详细的GC日志也并未发现什么端倪. 希望有了解的同学可以在下面评论区可以给我留言, 再次先感谢了O(∩_∩)O~.

- by

by 攻城师@翡青

- Email: feiqing.zjf@gmail.com

- 博客:攻城师-翡青 - http://blog.csdn.net/zjf280441589

- 微博:攻城师-翡青 - http://weibo.com/u/3319050953

- 本文标签: ORM java db tar UI Google DOM final http Action 分布式 spring remote map 内存模型 App 开发 统计 Job id 目录 tab js cat API CTO 操作系统 src 下载 希望 unix https 产品 管理 TCP 参数 build 互联网企业 IO 测试 git 互联网 NSA 时间 list 企业 ACE NIO 定制 awk 进程 IDE 代码 value 微博 生命 图片 ssl ip 线程 redis GitHub key 开源 cache apache mail grep 数据 SDN 实例 总结 博客

- 版权声明: 本文为互联网转载文章,出处已在文章中说明(部分除外)。如果侵权,请联系本站长删除,谢谢。

- 本文海报: 生成海报一 生成海报二

![[HBLOG]公众号](https://www.liuhaihua.cn/img/qrcode_gzh.jpg)