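[Python] How to Fetch Zhihu's Most Valuable Content

1 Preface

Most people reading this blog have probably heard of Zhihu; if you have not, the link is here: Zhihu. As a Zhihu user, I wanted a deeper understanding of the "what is it like to xxx" questions (or rather, of their pictures) and to put "technology changes life" into practice (admittedly out of some boredom). So, in a spirit of exploration, this post uses requests to scrape Zhihu's "most valuable" content with a short Python program.

2 Practice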

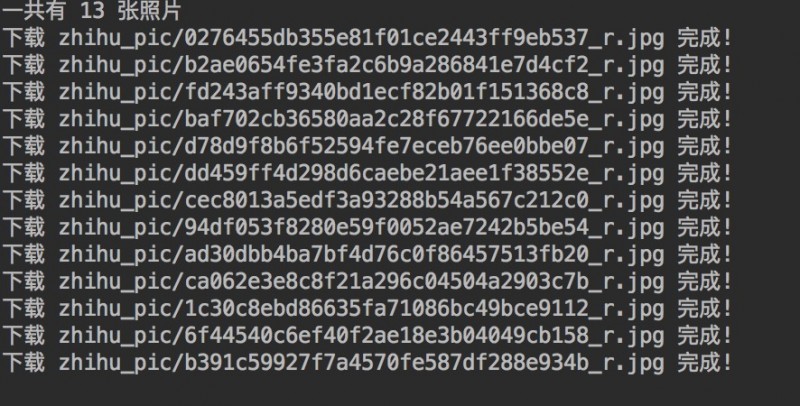

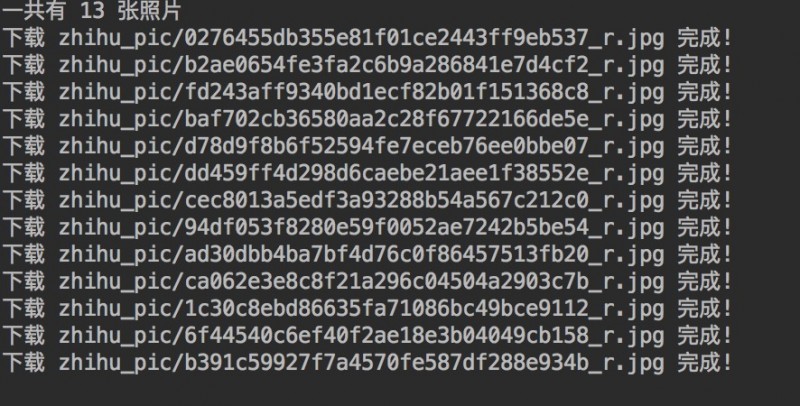


    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    import os
    import re
    from os.path import basename
    from urllib.parse import urlsplit

    import requests


    def getHtml(url):
        """Fetch a page and return its HTML text, or '' on a non-200 response."""
        session = requests.Session()
        # Pretend to be a browser
        header = {
            'User-Agent': "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36",
            'Accept-Encoding': 'gzip, deflate'}
        res = session.get(url, headers=header)
        if res.status_code == 200:
            content = res.text
        else:
            content = ''
        return content


    def mkdir(path):
        """Create the folder if it does not exist; return True if it was created."""
        if not os.path.exists(path):
            print('Creating folder:', path)
            os.makedirs(path)
            return True
        else:
            print("Images are stored in:", os.path.join(os.getcwd(), path))
            return False


    def download_pic(img_lists, dir_name):
        print("{num} images in total".format(num=len(img_lists)))
        for image_url in img_lists:
            response = requests.get(image_url, stream=True)
            if response.status_code == 200:
                image = response.content
            else:
                continue
            # Name the file after the last component of the URL path
            file_name = os.path.join(dir_name, basename(urlsplit(image_url)[2]))
            try:
                # The with block closes the file, so no explicit close is needed
                with open(file_name, "wb") as picture:
                    picture.write(image)
            except IOError:
                print("IO Error\n")
                return
            print("Downloaded {pic_name}".format(pic_name=file_name))


    def getAllImg(html):
        # Use a regular expression to pick the image URLs out of the page source
        # reg = r'data-actualsrc="(.*?)">'
        reg = r'https://pic\d\.zhimg\.com/[a-fA-F0-9]{5,32}_\w+\.jpg'
        imgre = re.compile(reg, re.S)
        tmp_list = imgre.findall(html)  # every image URL found in the page
        # Drop avatars and duplicates
        tmp_list = list(set(tmp_list))  # deduplicate
        imglist = []
        for item in tmp_list:
            if item.endswith('r.jpg'):
                imglist.append(item)
        print('num : %d' % (len(imglist)))
        return imglist


    if __name__ == '__main__':
        question_id = 35990613
        zhihu_url = "https://www.zhihu.com/question/{qid}".format(qid=question_id)
        html_content = getHtml(zhihu_url)
        path = 'zhihu_pic'
        mkdir(path)  # create the local folder
        img_list = getAllImg(html_content)  # list of image URLs
        download_pic(img_list, path)  # save the images
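As a rough illustration of the filtering step in `getAllImg`, the sketch below runs a pattern of the same shape against a small hand-written HTML fragment. The sample markup, the hash values, and the helper name `extract_image_urls` are made up for this example, and the pattern assumes the image host has the form `pic<digit>.zhimg.com`; real pages may well differ.

```python
import re

# Assumed URL shape: https://pic<digit>.zhimg.com/<hex hash>_<suffix>.jpg
IMG_PATTERN = re.compile(r'https://pic\d\.zhimg\.com/[a-fA-F0-9]{5,32}_\w+\.jpg')


def extract_image_urls(html):
    """Return the unique image URLs in html that end in 'r.jpg', sorted."""
    unique_urls = set(IMG_PATTERN.findall(html))  # the set removes duplicates
    # Keep only URLs ending in 'r.jpg', the same suffix test the script applies
    return sorted(url for url in unique_urls if url.endswith('r.jpg'))


# Hypothetical fragment: one image referenced twice, plus one other variant
sample_html = (
    '<img src="https://pic1.zhimg.com/0123456789abcdef_r.jpg">'
    '<img src="https://pic1.zhimg.com/0123456789abcdef_r.jpg">'
    '<img src="https://pic2.zhimg.com/fedcba9876543210_b.jpg">'
)

print(extract_image_urls(sample_html))
```

Sorting the result is only there to make the output order predictable; a plain `set` has no defined order.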

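The first version still has shortcomings: it cannot fetch all of the images, because it does not yet handle the automatic click on "More" that loads further answers.

The second version of the code fixes the first version's inability to load more answers automatically.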

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    import json
    import os
    import re
    from os.path import basename
    from urllib.parse import urlsplit

    import requests

    headers = {
        'User-Agent': "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36",
        'Accept-Encoding': 'gzip, deflate'}


    def mkdir(path):
        """Create the folder if it does not exist; return True if it was created."""
        if not os.path.exists(path):
            print('Creating folder:', path)
            os.makedirs(path)
            return True
        else:
            print("Images are stored in:", os.path.join(os.getcwd(), path))
            return False


    def download_pic(img_lists, dir_name):
        print("{num} images in total".format(num=len(img_lists)))
        for image_url in img_lists:
            response = requests.get(image_url, stream=True)
            if response.status_code == 200:
                image = response.content
            else:
                continue
            # Name the file after the last component of the URL path
            file_name = os.path.join(dir_name, basename(urlsplit(image_url)[2]))
            try:
                # The with block closes the file, so no explicit close is needed
                with open(file_name, "wb") as picture:
                    picture.write(image)
            except IOError:
                print("IO Error\n")
                continue
            print("Downloaded {pic_name}".format(pic_name=file_name))


    def get_image_url(qid, headers):
        # Use a regular expression to pick the image URLs out of each answer
        # reg = r'data-actualsrc="(.*?)">'
        tmp_url = "https://www.zhihu.com/node/QuestionAnswerListV2"
        size = 10
        image_urls = []
        session = requests.Session()
        # reg = r'https://pic\d\.zhimg\.com/[a-fA-F0-9]{5,32}_\w+\.jpg'
        imgreg = re.compile('data-original="(.*?)"', re.S)
        # Loop to do what clicking "More" does until every answer has been
        # fetched; each page is one batch of answers.
        while True:
            params = json.dumps({"url_token": qid, "pagesize": "10", "offset": size})
            postdata = {'method': 'next', 'params': params}
            page = session.post(tmp_url, headers=headers, data=postdata)
            ret = page.json()  # parse the JSON body rather than calling eval on it
            answers = ret['msg']
            size += 10
            if not answers:
                print("Finished collecting image URLs, pages:", (size - 10) // 10)
                return image_urls
            for answer in answers:
                tmp_list = []
                url_items = imgreg.findall(answer)
                for item in url_items:  # strip the escape character '\' from the URL
                    image_url = item.replace("\\", "")
                    tmp_list.append(image_url)
                # Drop avatars and duplicates, keeping the data-original values
                tmp_list = list(set(tmp_list))  # deduplicate
                for item in tmp_list:
                    if item.endswith('r.jpg'):
                        print(item)
                        image_urls.append(item)
            print('size: %d, num : %d' % (size, len(image_urls)))


    if __name__ == '__main__':
        question_id = 26037846
        path = 'zhihu_pic'
        mkdir(path)  # create the local folder
        img_list = get_image_url(question_id, headers)  # list of image URLs
        download_pic(img_list, path)  # save the images
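The "keep requesting until an empty page comes back" loop in `get_image_url` can also be looked at separately from the network code. The sketch below is one possible way to do that, not the script's own structure: it assumes a caller-supplied `fetch_page(offset, page_size)` function (a hypothetical name, not part of the original script) that returns a list of answer HTML strings, and it uses a small in-memory stand-in instead of a real HTTP request so that the loop can be run offline.

```python
def collect_all_answers(fetch_page, page_size=10, start_offset=0):
    """Call fetch_page repeatedly, advancing the offset, until a page is empty."""
    answers = []
    offset = start_offset
    while True:
        page = fetch_page(offset, page_size)
        if not page:  # an empty page means there is nothing left to load
            return answers
        answers.extend(page)
        offset += page_size


# Stand-in data source (made up for the example): 25 fake answers
FAKE_ANSWERS = ['<div>answer {}</div>'.format(i) for i in range(25)]


def fake_fetch_page(offset, page_size):
    """Return one slice of the fake answers, like one page of results."""
    return FAKE_ANSWERS[offset:offset + page_size]


all_answers = collect_all_answers(fake_fetch_page)
print(len(all_answers))
```

Passing the fetch function in as an argument keeps the stopping condition easy to check without touching the site; a real `fetch_page` would wrap the `session.post` call and return the `msg` list from the response.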
