dubbo2.5-spring4-mybatis3.2-springmvc4-logback-ELK Integration (Part 13): Setting Up a logback + ELK Log Collection Server

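It has been a while since my last post. Today's topic is setting up a log collection server with logback + ELK + SpringMVC. I will cover what ELK is, where logback fits in, and the setup steps.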

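1. What is ELK?

ELK is an acronym for three pieces of software: Elasticsearch, Logstash, and Kibana.

- Elasticsearch is a distributed search and analytics engine, designed primarily to be stable, horizontally scalable, and easy to manage.

- Logstash is a flexible pipeline for collecting, processing, and shipping data.

- Kibana is a data visualization platform that lets you interact with your data by turning it into rich charts.

Combining the three (collection and processing, storage and analysis, and visualization) gives you ELK.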

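2. The ELK workflow

The flow looks like this: Logback -> Logstash -> (Elasticsearch <-> Kibana)

- The application produces logs, which the logback logging framework handles.

- logback sends the log data to Logstash.

- Logstash forwards the data to Elasticsearch.

- Kibana queries Elasticsearch and presents the results to the user.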

3. ELK official documentation

https://www.elastic.co/guide/index.html

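4. Environment setup

4.1 Base environment

- JDK 1.8

- CentOS 7.0 x86-64

Note: the ELK services should not be started as root (Elasticsearch in particular refuses to run as root), so create a dedicated user first.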

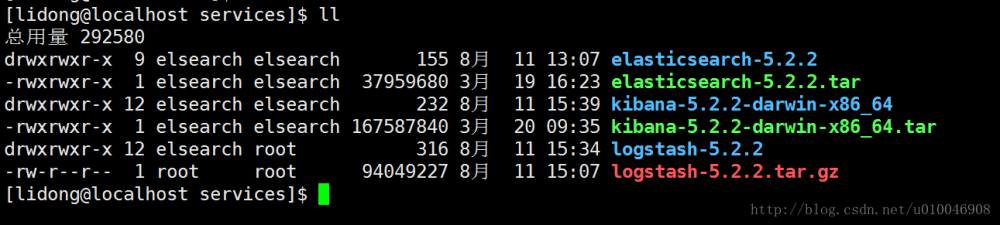

Download the ELK packages

Create a user and a directory for ELK and grant ownership, so everything is managed in one place.

[root@localhost /]# mkdir elsearch

[root@localhost /]# groupadd elsearch

[root@localhost /]# useradd -g elsearch elsearch

[root@localhost /]# chown -R elsearch:elsearch /elsearch

[root@localhost /]# su elsearch

[elsearch@localhost /]$ cd elsearch

4.2 Download (you can also get the latest versions from the official site)

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.2.2.tar.gz

wget https://artifacts.elastic.co/downloads/logstash/logstash-5.2.2.tar.gz

wget https://artifacts.elastic.co/downloads/kibana/kibana-5.2.2-linux-x86_64.tar.gz

This walkthrough uses version 5.2.2 throughout.

4.3 Configure Elasticsearch

Elasticsearch can be deployed as a cluster, but here I simply unpack it and edit the configuration file directly.

elasticsearch.yml

cluster.name: es_cluster_1

node.name: node-1

path.data: /usr/local/services/elasticsearch-5.2.2/data

path.logs: /usr/local/services/elasticsearch-5.2.2/logs

network.host: 192.168.10.200

http.port: 9200

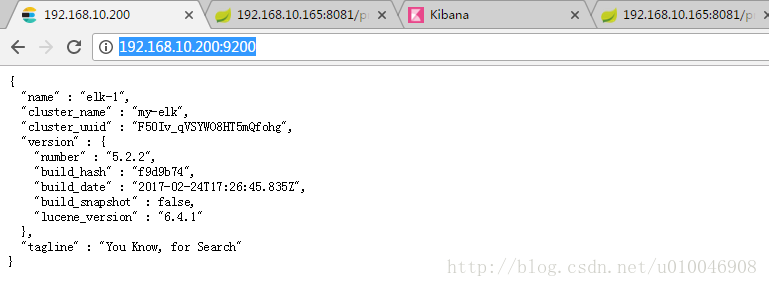

Start Elasticsearch, then open http://192.168.10.200:9200/

If you see the page shown above, Elasticsearch started successfully.
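The browser check above can also be scripted as a plain HTTP GET. The following is only a sketch and not part of the original setup: the class name `EsHttpCheck` and the 3-second timeouts are illustrative choices, and it relies on nothing beyond the JDK.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

/** Sketch: fetch an HTTP endpoint (here the Elasticsearch root) and print the reply. */
final class EsHttpCheck {

    /** Reads the stream to the end and decodes it as UTF-8. */
    static String readAll(InputStream in) {
        try {
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            int n;
            while ((n = in.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
            return new String(out.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Issues a GET and returns the status code, a newline, and the body. */
    static String get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
        conn.setConnectTimeout(3000); // arbitrary timeouts, chosen for illustration
        conn.setReadTimeout(3000);
        try {
            int status = conn.getResponseCode();
            InputStream body = status < 400 ? conn.getInputStream() : conn.getErrorStream();
            return status + "\n" + (body == null ? "" : readAll(body));
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) {
        // Default URL is the address used in this article; pass another as the first argument.
        String url = args.length > 0 ? args[0] : "http://192.168.10.200:9200/";
        try {
            System.out.println(get(url));
        } catch (IOException e) {
            System.err.println("request failed: " + e.getMessage());
        }
    }
}
```

A 200 status followed by a JSON body suggests the node is reachable; a connection error usually points at `network.host`, the port, or a firewall.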

Note: I ran into a few problems during installation. The following article already covers how to resolve them:

http://www.cnblogs.com/sloveling/p/elasticsearch.html

4.4 Configure Logstash

Unpack

tar -zxvf /usr/local/services/logstash-5.2.2.tar.gz

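Create a test configuration, just to check that the service starts. This file does not exist by default; create it, and pass its path at startup so that Logstash runs with this configuration.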

vi /usr/local/services/logstash-5.2.2/config/logstash.conf

input {

stdin { }

}

output {

stdout {

codec => rubydebug {}

}

}

There are two ways to start Logstash with a configuration file:

- logstash -f logstash-test.conf //normal start

- logstash agent -f logstash-test.conf --debug //start in debug mode

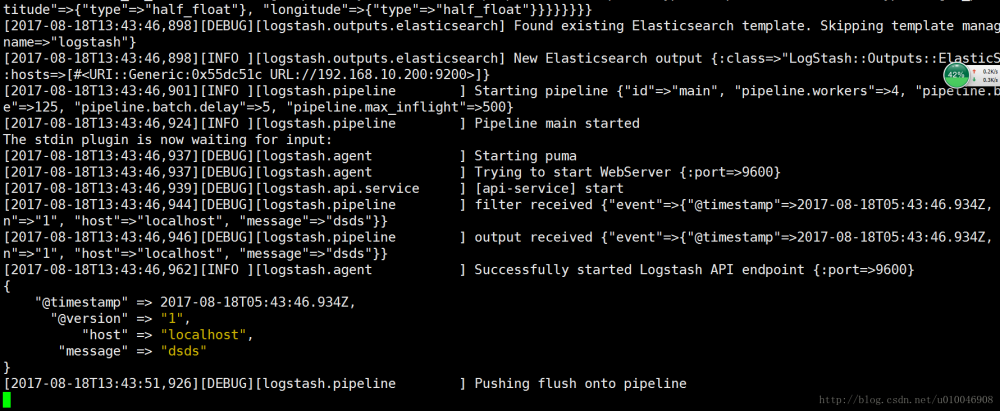

./bin/logstash -f config/logstash.conf --debug

On a successful start you will see output like the following.

At this point, anything typed into the console is collected as an event:

n"=>"1", "host"=>"localhost", "message"=>"我们都是好好"}}

{

"@timestamp" => 2017-08-18T05:45:25.340Z,

"@version" => "1",

"host" => "localhost",

"message" => "我们都是好好"

}

[2017-08-18T13:45:26,933][DEBUG][logstash.pipeline ] Pushing flush onto pipeline

[2017-08-18T13:45:31,934][DEBUG][logstash.pipeline ] Pushing flush onto pipeline
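One detail in this output is easy to misread: `@timestamp` is in UTC (note the trailing `Z`), while the bracketed times on the DEBUG lines are about eight hours ahead, which suggests they are local time on a UTC+8 host. The offset is inferred from the sample output rather than stated anywhere in the setup. A minimal sketch of the conversion, with a hypothetical class name:

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneOffset;

/** Sketch: convert a UTC "@timestamp" value to wall-clock time at a fixed offset. */
final class TimestampOffsetSketch {

    static LocalDateTime toLocal(String utcInstant, int offsetHours) {
        return Instant.parse(utcInstant)
                .atOffset(ZoneOffset.ofHours(offsetHours))
                .toLocalDateTime();
    }

    public static void main(String[] args) {
        // The event timestamp from the output above, shifted by the assumed +8 hours.
        System.out.println(toLocal("2017-08-18T05:45:25.340Z", 8));
    }
}
```

With the +8 offset the event time becomes 13:45:25.340, about a second before the first DEBUG line.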

4.5 Configure Kibana

Unpack

[elsearch@localhost root]$ tar -zxvf /usr/local/services/kibana-5.2.2-linux-x86_64.tar.gz

Open the configuration file

[elsearch@localhost root]$ vim /usr/local/services/kibana-5.2.2-linux-x86_64/config/kibana.yml

Edit the configuration, appending the following at the end

server.port: 8888

server.host: "192.168.10.200"

elasticsearch.url: "http://192.168.10.200:9200"

Start

[elsearch@localhost root]$ /usr/local/services/kibana-5.2.2-linux-x86_64/bin/kibana &

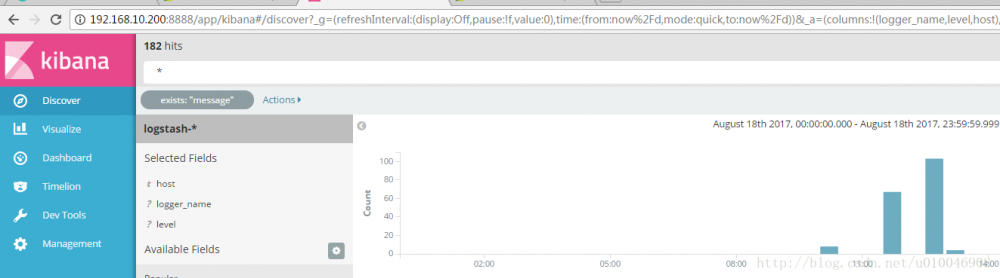

Then open http://192.168.10.200:8888

The basic ELK environment is now in place. Next we integrate logback with ELK to collect logs from a Java EE application.

4.6 logback-ELK integration

4.6.1 This example uses Maven

pom.xml

<dependency>

<groupId>net.logstash.logback</groupId>

<artifactId>logstash-logback-encoder</artifactId>

<version>4.11</version>

</dependency>

<!-- SLF4J implementation and integration -->

<dependency>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>net.logstash.log4j</groupId>

<artifactId>jsonevent-layout</artifactId>

<version>1.7</version>

</dependency>

4.6.2 Configure the logback configuration file

<?xml version="1.0" encoding="UTF-8"?>

<configuration debug="false">

<!-- Directory where log files are stored. Do not use relative paths in the logback configuration -->

<property name="LOG_HOME" value="E:/logs" />

<!-- Console output -->

<appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<!-- Output format: %d is the date, %thread the thread name, %-5level the level left-aligned and padded to 5 characters, %msg the log message, %n a newline -->

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>

</encoder>

</appender>

<!-- Roll to a new log file every day -->

<appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">

<rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">

<!-- File name pattern for the log files -->

<FileNamePattern>${LOG_HOME}/TestWeb.log_%d{yyyy-MM-dd}.log</FileNamePattern>

<!-- Number of days of log files to keep -->

<MaxHistory>30</MaxHistory>

</rollingPolicy>

<encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">

<!-- Output format: %d is the date, %thread the thread name, %-5level the level left-aligned and padded to 5 characters, %msg the log message, %n a newline -->

<pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>

</encoder>

<!-- Maximum size of a log file -->

<triggeringPolicy class="ch.qos.logback.core.rolling.SizeBasedTriggeringPolicy">

<MaxFileSize>10MB</MaxFileSize>

</triggeringPolicy>

</appender>

<!-- show parameters for hibernate sql (Hibernate-specific) -->

<logger name="org.hibernate.type.descriptor.sql.BasicBinder" level="TRACE" />

<logger name="org.hibernate.type.descriptor.sql.BasicExtractor" level="DEBUG" />

<logger name="org.hibernate.SQL" level="DEBUG" />

<logger name="org.hibernate.engine.QueryParameters" level="DEBUG" />

<logger name="org.hibernate.engine.query.HQLQueryPlan" level="DEBUG" />

<!-- MyBatis log configuration -->

<logger name="org.apache.ibatis" level="TRACE"/>

<logger name="java.sql.Connection" level="DEBUG"/>

<logger name="java.sql.Statement" level="DEBUG"/>

<logger name="java.sql.PreparedStatement" level="DEBUG"/>

<appender name="stash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">

<destination>192.168.10.200:8082</destination>

<!-- encoder is required -->

<encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder" />

</appender>

<!-- Root log level -->

<root level="INFO">

<!-- Logs are shipped to Logstash only if the stash appender is referenced here -->

<appender-ref ref="stash" />

<appender-ref ref="STDOUT" />

<appender-ref ref="FILE" />

</root>

</configuration>

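Note: Logstash receives the logs at 192.168.10.200:8082, so the <destination> above must match the tcp input in the Logstash configuration.

4.6.3 Configure logstash-test.conf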

vi logstash-test.conf

input {

tcp {

host => "192.168.10.200"

port => 8082

mode => "server"

ssl_enable => false

codec => json {

charset => "UTF-8"

}

}

}

output {

elasticsearch {

hosts => "192.168.10.200:9200"

index => "logstash-test"

}

stdout { codec => rubydebug {} }

}

Start collecting

./bin/logstash -f config/logstash-test.conf --debug
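Before wiring up the application, the pipeline can be smoke-tested by pushing a single event into the tcp input by hand. The sketch below assumes that Logstash is listening on 192.168.10.200:8082 as configured above and that it treats each newline-terminated JSON object as one event. The class name, the `smoke-test` logger name, and the hand-rolled JSON escaping are illustrative only; this is not how logstash-logback-encoder builds its events.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Sketch: send one JSON event to the Logstash tcp input without the application. */
final class LogstashTcpSmokeTest {

    /** Escapes the characters that may not appear raw inside a JSON string. */
    static String escapeJson(String s) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds one newline-terminated JSON event; Logstash adds @timestamp on receipt. */
    static String toJsonLine(String level, String loggerName, String message) {
        return "{\"level\":\"" + escapeJson(level) + "\","
             + "\"logger_name\":\"" + escapeJson(loggerName) + "\","
             + "\"message\":\"" + escapeJson(message) + "\"}\n";
    }

    /** Opens a TCP connection, writes the line as UTF-8, and closes the socket. */
    static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 3000); // 3 s connect timeout
            OutputStream out = socket.getOutputStream();
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) {
        // A message that is itself JSON text, similar to what the controller logs later.
        String line = toJsonLine("INFO", "smoke-test", "{\"name\":\"smoke-test\"}");
        System.out.print(line);
        try {
            send("192.168.10.200", 8082, line);
        } catch (IOException e) {
            System.err.println("send failed: " + e.getMessage());
        }
    }
}
```

If the pipeline is working, the event should appear in the Logstash console via the rubydebug output. Because the message is embedded as a JSON string, its inner quotes are escaped on the wire.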

4.6.4 Add log output to a Controller

package com.example.demo;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.PathVariable;

import org.springframework.web.bind.annotation.RestController;

@RestController

public class TestEndpoints {

private final static Logger logger = LoggerFactory.getLogger(TestEndpoints.class);

@GetMapping("/product/{id}")

public String getProduct(@PathVariable String id) {

String data = "{\"name\":\"李东\"}";

logger.info(data);

return "product id : " + id;

}

@GetMapping("/order/{id}")

public String getOrder(@PathVariable String id) {

return "order id : " + id;

}

}

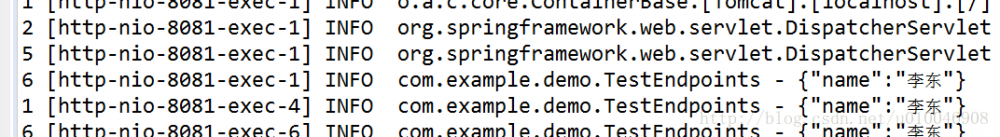

Console log after the request is made

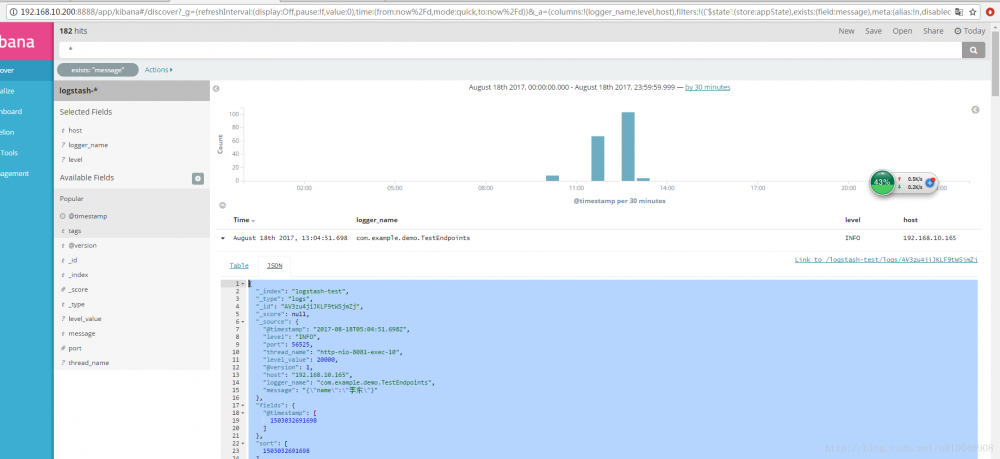

After that, the log entry shows up in Kibana

{

"_index": "logstash-test",

"_type": "logs",

"_id": "AV3zu4jiJKLF9tWSjmZj",

"_score": null,

"_source": {

"@timestamp": "2017-08-18T05:04:51.698Z",

"level": "INFO",

"port": 56525,

"thread_name": "http-nio-8081-exec-10",

"level_value": 20000,

"@version": 1,

"host": "192.168.10.165",

"logger_name": "com.example.demo.TestEndpoints",

"message": "{\"name\":\"李东\"}"

},

"fields": {

"@timestamp": [

1503032691698

]

},

"sort": [

1503032691698

]

}
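The `sort` and `fields` values in this document are the same instant as `@timestamp`, expressed as milliseconds since the Unix epoch. A quick way to confirm this, as a sketch with a hypothetical class name:

```java
import java.time.Instant;

/** Sketch: convert the epoch-millisecond value from Kibana back to ISO-8601 UTC. */
final class EpochMillisSketch {

    static String toIso(long epochMillis) {
        return Instant.ofEpochMilli(epochMillis).toString();
    }

    public static void main(String[] args) {
        // Value copied from the "sort" array above.
        System.out.println(toIso(1503032691698L)); // prints 2017-08-18T05:04:51.698Z
    }
}
```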

Those are essentially all the steps. I hope you will try them out yourself after reading. Thanks for reading.
