Hadoop Learning (Part 2): A First Small Exercise

Use Eclipse to write a program that counts how many times each word appears in a text file.

Eclipse download page: http://www.eclipse.org/downloads/packages/release/Luna/SR2

If Eclipse refuses to start with the following error:

> A Java Runtime Environment (JRE) or Java Development Kit (JDK) must be available in order to run Eclipse. No Java virtual machine was found after searching the following locations:…

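This means Eclipse cannot find a JDK, so tell it where one is. In a terminal, change to your Eclipse directory and run:

```shell
mkdir jre
cd jre
# Create a symbolic link named bin that points to your JDK's bin directory
ln -s <your JDK directory>/bin bin
# For example:
ln -s /home/chs/java/jdk1.8.0_172/bin bin
```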
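To confirm the link is in place before relaunching Eclipse, you can check it with `java.nio.file`. This is a sketch, not part of Eclipse. The class name `JreLinkCheck` is hypothetical. The check only verifies two things: that `<eclipse dir>/jre/bin` is a symbolic link, and that an executable `java` is reachable through it.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: checks the <eclipse dir>/jre/bin link described above.
public class JreLinkCheck {

    // True if <eclipseDir>/jre/bin is a symbolic link and an executable "java" is reachable through it.
    public static boolean isJreLinked(Path eclipseDir) {
        Path bin = eclipseDir.resolve("jre").resolve("bin");
        if (!Files.isSymbolicLink(bin)) {
            return false; // link missing, or bin is an ordinary directory
        }
        // The OS follows the link when checking access, so this tests the real JDK launcher
        return Files.isExecutable(bin.resolve("java"));
    }

    // Returns the target stored in the jre/bin link (not resolved any further).
    public static Path linkTarget(Path eclipseDir) {
        try {
            return Files.readSymbolicLink(eclipseDir.resolve("jre").resolve("bin"));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Pass the Eclipse directory as the first argument (defaults to the current directory)
        Path eclipseDir = Paths.get(args.length > 0 ? args[0] : ".");
        if (isJreLinked(eclipseDir)) {
            System.out.println("OK: jre/bin -> " + linkTarget(eclipseDir));
        } else {
            System.out.println("jre/bin is not a symlink to a directory containing an executable java");
        }
    }
}
```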

Create an `in` directory in HDFS:

```shell
hadoop fs -mkdir /in
```

Create a local file named `words`:

```shell
touch words
vim words
```

Type in some words to analyze, for example:

```
hadoop spark word space hdfs hadoop word mapper reduce hello hadoop hello word hai hadoop space total google
```

Then save and exit.
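If you prefer not to use vim, you can write the same sample file from Java. This is a minimal sketch that uses only the JDK and is Java 8 compatible. The class name `MakeWords` is hypothetical. By default it writes to `./words`; pass a different path as the first argument to change that.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper: writes the sample words used in this exercise to a local file.
public class MakeWords {

    // The sample words, on a single line.
    static final String SAMPLE =
        "hadoop spark word space hdfs hadoop word mapper reduce hello hadoop hello word hai hadoop space total google\n";

    // Creates the file, or overwrites it if it already exists.
    public static void writeSample(Path target) {
        try {
            Files.write(target, SAMPLE.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path target = Paths.get(args.length > 0 ? args[0] : "words");
        writeSample(target);
        System.out.println("Wrote " + target.toAbsolutePath());
    }
}
```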

Upload the `words` file to the `in` directory:

```shell
hadoop fs -put words /in
```

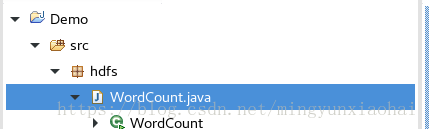

In Eclipse, create a class named `WordCount` in a package named `hdfs`, matching the code below.

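Add the Hadoop JARs to the project:

Right-click the project > Properties > Java Build Path > Libraries > Add External JARs, then browse to the `share/hadoop` folder inside the directory where Hadoop was extracted. Import the JARs you need, or simply import all of them.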

```java
package hdfs;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        // Expect exactly two arguments: <input path> <output path>
        if (args.length != 2) {
            System.err.println("Usage: WordCount <input path> <output path>");
            System.exit(2);
        }

        // Create the job (Job.getInstance replaces the deprecated new Job(conf) constructor)
        Configuration con = new Configuration();
        Job job = Job.getInstance(con, "word count");
        job.setJarByClass(WordCount.class);

        // Set the input path
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        // Set the output path (it must not exist yet)
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Set the class that implements the map function
        job.setMapperClass(MyMap.class);
        // Set the class that implements the reduce function
        job.setReducerClass(MyReduce.class);

        // Set the key and value types produced by the map phase
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        // Set the key and value types produced by the reduce phase
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Submit the job, wait for it to finish, and exit with 0 on success
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }

    public static class MyMap extends Mapper<LongWritable, Text, Text, IntWritable> {
        private static final IntWritable ONE = new IntWritable(1);
        private final Text word = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split the line on runs of whitespace and emit (word, 1) for each word
            for (String token : value.toString().split("\\s+")) {
                if (token.isEmpty()) {
                    continue; // leading whitespace produces one empty token
                }
                word.set(token);
                context.write(word, ONE);
            }
        }
    }

    public static class MyReduce extends Reducer<Text, IntWritable, Text, IntWritable> {
        @Override
        protected void reduce(Text k2, Iterable<IntWritable> v2s, Context context)
                throws IOException, InterruptedException {
            // Add up all the 1s emitted for this word
            int count = 0;
            for (IntWritable cn : v2s) {
                count += cn.get();
            }
            context.write(k2, new IntWritable(count));
        }
    }
}
```
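You can imitate the map → shuffle → reduce flow in plain Java to see what the job computes without a cluster. This is a local sketch, not Hadoop code, and `LocalWordCount` and its methods are hypothetical. A `TreeMap` stands in for the framework's sort-and-group step; for plain ASCII words like these, its ordering matches the sorted reducer output. Lines are split on runs of whitespace, and empty tokens are skipped.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, Hadoop-free imitation of the WordCount job's three phases.
public class LocalWordCount {

    // One (key, value) pair, like the arguments a Mapper passes to context.write.
    static final class Pair {
        final String key;
        final int value;

        Pair(String key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    // Map phase: emit (word, 1) for every whitespace-separated token of one line.
    static List<Pair> map(String line) {
        List<Pair> out = new ArrayList<>();
        for (String token : line.split("\\s+")) {
            if (!token.isEmpty()) { // leading whitespace yields one empty token
                out.add(new Pair(token, 1));
            }
        }
        return out;
    }

    // Shuffle/sort: group the values by key; TreeMap keeps the keys sorted.
    static TreeMap<String, List<Integer>> shuffle(List<Pair> pairs) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Pair p : pairs) {
            grouped.computeIfAbsent(p.key, k -> new ArrayList<>()).add(p.value);
        }
        return grouped;
    }

    // Reduce phase: sum the values collected for each key.
    static TreeMap<String, Integer> reduce(TreeMap<String, List<Integer>> grouped) {
        TreeMap<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int v : e.getValue()) {
                sum += v;
            }
            counts.put(e.getKey(), sum);
        }
        return counts;
    }

    // Runs map over every input line, then shuffle and reduce.
    public static TreeMap<String, Integer> wordCount(List<String> lines) {
        List<Pair> mapped = new ArrayList<>();
        for (String line : lines) {
            mapped.addAll(map(line));
        }
        return reduce(shuffle(mapped));
    }

    public static void main(String[] args) {
        List<String> input = Collections.singletonList(
            "hadoop spark word space hdfs hadoop word mapper reduce hello hadoop hello word hai hadoop space total google");
        for (Map.Entry<String, Integer> e : wordCount(input).entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

Running `main` prints the eleven word/count pairs listed at the end of this post, one per line, with a tab between each word and its count.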

Create a folder of your own to store the JAR.

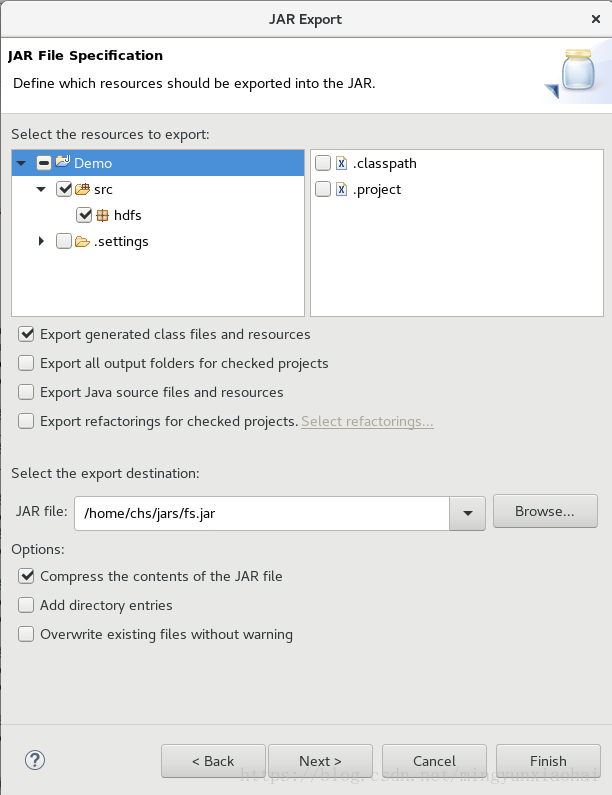

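Package the project as a JAR with Eclipse:

Right-click the project, choose Export, select Java > JAR file, and click Next.

Other users must have write permission on the folder you save the JAR to. Then click Next.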

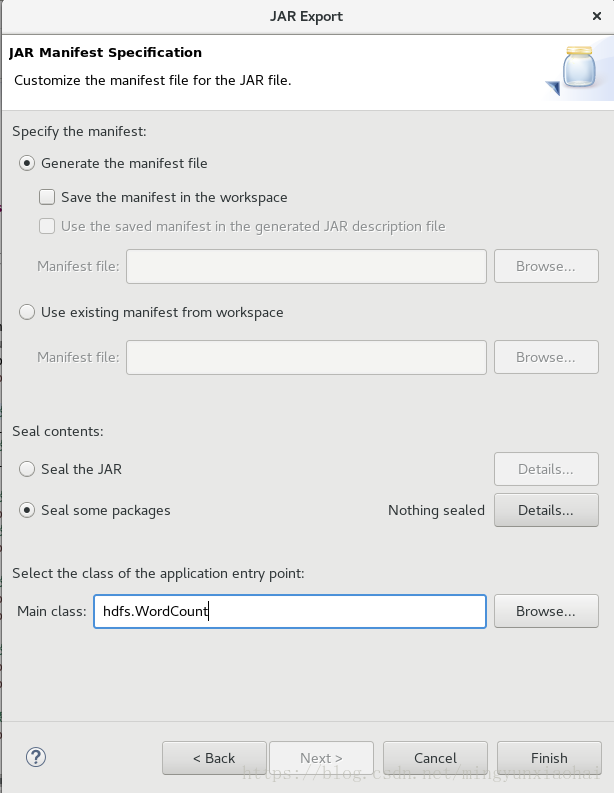
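On Linux, you can check from Java whether other users can write to the JAR folder, and grant that permission if needed. This sketch makes three assumptions. First, the class name `OthersWritable` is hypothetical. Second, the folder is on a POSIX file system; `Files.getPosixFilePermissions` throws `UnsupportedOperationException` elsewhere. Third, execute permission is granted together with write, because creating a file inside a directory needs both. The effect is roughly `chmod o+wx`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.PosixFilePermission;
import java.util.HashSet;
import java.util.Set;

// Hypothetical helper for the "other users must be able to write" requirement on the JAR folder.
public class OthersWritable {

    // True if the "others" class has write permission on the directory.
    public static boolean othersCanWrite(Path dir) {
        try {
            return Files.getPosixFilePermissions(dir).contains(PosixFilePermission.OTHERS_WRITE);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Adds write and execute for others (roughly `chmod o+wx dir`), keeping all existing bits.
    public static void grantOthersWrite(Path dir) {
        try {
            Set<PosixFilePermission> perms = new HashSet<>(Files.getPosixFilePermissions(dir));
            perms.add(PosixFilePermission.OTHERS_WRITE);
            perms.add(PosixFilePermission.OTHERS_EXECUTE); // needed to create files inside a directory
            Files.setPosixFilePermissions(dir, perms);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Only reports; call grantOthersWrite explicitly if you own the folder and want to fix it
        Path dir = Paths.get(args.length > 0 ? args[0] : ".");
        System.out.println(dir.toAbsolutePath() + " writable by others: " + othersCanWrite(dir));
    }
}
```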

Select the package and class that contain the `main` method, then click Finish.

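To run the job, change to the folder where the JAR is stored and run:

```shell
hadoop jar fs.jar hdfs.WordCount /in/words /out
```

- `hadoop jar`: the command that runs a JAR
- `fs.jar`: the name of the exported JAR
- `hdfs.WordCount`: the class containing `main`, with its package name
- `/in/words`: the input path on HDFS
- `/out`: the output path on HDFS; it must not exist yet, or the job fails

Note: if the JAR's manifest already names a main class (the class chosen in the export step), `hadoop jar` uses that class. It then passes `hdfs.WordCount` to the program as an ordinary argument, so the program receives three arguments instead of two. In that case, drop the class name from the command: `hadoop jar fs.jar /in/words /out`.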

When the job finishes, an `out` directory appears under the HDFS root. List its contents:

```shell
hadoop fs -ls /out
```

You should see:

```
-rw-r--r-- 1 root supergroup 0 2018-05-14 14:08 /out/_SUCCESS
-rw-r--r-- 1 root supergroup 88 2018-05-14 14:08 /out/part-r-00000
```
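Hadoop writes the empty `_SUCCESS` file as a marker once the job's output has been committed successfully. Each reduce task writes one `part-r-NNNNN` file; a map-only job writes `part-m-NNNNN` instead. This job runs with the default of one reducer, so there is only `part-r-00000`. As a sketch, you can check a local copy of the directory from Java, for example one fetched with `hadoop fs -get /out out`. `OutputDirCheck` is a hypothetical helper, not a Hadoop class.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for inspecting a local copy of a MapReduce output directory.
public class OutputDirCheck {

    // True if the job left its _SUCCESS marker in the directory.
    public static boolean succeeded(Path outDir) {
        return Files.isRegularFile(outDir.resolve("_SUCCESS"));
    }

    // Names of the reducer output files (part-r-00000, part-r-00001, ...), sorted.
    // Hidden checksum files such as .part-r-00000.crc do not match the glob.
    public static List<String> reducerParts(Path outDir) {
        List<String> names = new ArrayList<>();
        try (DirectoryStream<Path> stream = Files.newDirectoryStream(outDir, "part-r-*")) {
            for (Path p : stream) {
                names.add(p.getFileName().toString());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Collections.sort(names);
        return names;
    }

    // File name written by reduce task number `partition`, e.g. 0 -> part-r-00000.
    public static String partFileName(int partition) {
        return String.format("part-r-%05d", partition);
    }

    public static void main(String[] args) {
        Path outDir = Paths.get(args.length > 0 ? args[0] : "out");
        System.out.println("succeeded: " + succeeded(outDir));
        System.out.println("reducer output files: " + reducerParts(outDir));
    }
}
```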

View the contents of `part-r-00000`:

```shell
hadoop fs -cat /out/part-r-00000
```

The output is:

```
google	1
hadoop	4
hai	1
hdfs	1
hello	2
mapper	1
reduce	1
space	2
spark	1
total	1
word	3
```
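Each line of `part-r-00000` holds one key and its value, separated by a tab, which is the default separator of `TextOutputFormat`. To post-process the result outside Hadoop, a sketch like the following parses those lines back into a map. `ParseCounts` and its methods are hypothetical. It reads lines from standard input, so you can pipe the file into it, for example `hadoop fs -cat /out/part-r-00000 | java ParseCounts`.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: turns "word<TAB>count" lines back into a map.
public class ParseCounts {

    // Uses a LinkedHashMap so the file's order (already sorted by key) is kept.
    public static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            if (line.isEmpty()) {
                continue; // tolerate a trailing blank line
            }
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                throw new IllegalArgumentException("expected key<TAB>value: " + line);
            }
            counts.put(line.substring(0, tab), Integer.parseInt(line.substring(tab + 1).trim()));
        }
        return counts;
    }

    // The word with the highest count; on a tie the earlier one wins. Null for an empty map.
    public static String mostFrequent(Map<String, Integer> counts) {
        String best = null;
        int bestCount = Integer.MIN_VALUE;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        try (BufferedReader in = new BufferedReader(new InputStreamReader(System.in, StandardCharsets.UTF_8))) {
            Map<String, Integer> counts = parse(in.lines().collect(Collectors.toList()));
            int total = 0;
            for (int c : counts.values()) {
                total += c;
            }
            System.out.println(counts.size() + " distinct words, " + total + " in total");
            System.out.println("most frequent: " + mostFrequent(counts));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For the output above, it would report 11 distinct words and 18 in total, with `hadoop` as the most frequent.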

The job has now counted how many times each word appears.
