Architect's Training: Microservice Deployment - Container and Cluster Orchestration

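Background

From the previous chapters, we know that:

- `docker build` creates a custom image;

- `docker run` starts a container.

In real projects, however, and especially after moving to microservices, operations teams deal not with one image and one container but with dozens or even thousands of them. Creating containers one at a time by typing commands is neither systematic nor fast. Every time a machine is replaced or rebooted, all the commands have to be typed again, and at that rate you will sooner or later have pulled out all your hair.

Fortunately, Docker anticipated this and provides tools for it:

- Container orchestration: docker compose

- Cluster orchestration: swarm mode

There is also the very popular Kubernetes, which Google built on more than ten years of its own experience.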

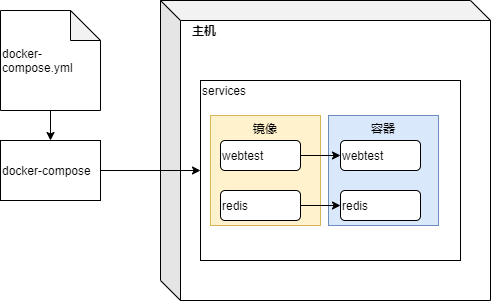

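Container orchestration: docker compose

Compose is a tool, written in Python, for defining and running multi-container Docker applications. You define the application's services in a docker-compose.yml template file (YAML format), and then a single command creates and starts every service defined in it.

Key concepts:

- Service: an application container. A service can actually run several instances of the same image.

- Project: a complete business unit made up of a group of related application containers.

A project consists of one or more related services (containers), and Compose manages everything at the project level.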

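Features:

- Different project names let you build separate groups of application environments on one host, for example different projects on a development machine. The default project name is the name of the directory that contains the yml file. Use `-p projectname` to set a custom name. The names of the images and containers that Compose creates are prefixed with the project name.

- Data in volumes mounted by existing containers is preserved. When `docker-compose up` runs and finds containers from a previous run, it copies their volumes to the new containers.

- Compose caches the configuration used to create each container. When a service is restarted, only the containers whose configuration has changed are recreated.

- The yml template supports variables, so parameters can be used to create different variants of an application:

```yaml
db:
  image: "postgres:${POSTGRES_VERSION}"
```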
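When Compose reads the file, it replaces `${POSTGRES_VERSION}` with a value from the shell environment. Compose 1.x also reads a `.env` file in the project directory. The sketch below shows the substitution in plain Python. It covers only the `$NAME`, `${NAME}`, `${NAME:-default}` and `$$` forms. It is an illustration under those assumptions, not Compose's own parser, and `interpolate` is a hypothetical helper.

```python
import re

# Simplified subset of Compose syntax: $$, ${NAME}, ${NAME:-default} and $NAME
_PATTERN = re.compile(
    r"\$(?:(?P<escaped>\$)"
    r"|\{(?P<braced>[A-Za-z_][A-Za-z0-9_]*)(?::-(?P<default>[^}]*))?\}"
    r"|(?P<named>[A-Za-z_][A-Za-z0-9_]*))"
)


def interpolate(value: str, env: dict) -> str:
    """Substitute variables in a string value roughly the way a Compose file would."""

    def replace(match):
        if match.group("escaped"):
            return "$"  # "$$" stands for a literal dollar sign
        name = match.group("braced") or match.group("named")
        default = match.group("default")
        current = env.get(name, "")
        if default is not None and current == "":
            return default  # ":-" falls back when the variable is unset or empty
        return current  # an unset variable becomes an empty string

    return _PATTERN.sub(replace, value)


print(interpolate("postgres:${POSTGRES_VERSION}", {"POSTGRES_VERSION": "12"}))
print(interpolate("postgres:${POSTGRES_VERSION:-latest}", {}))
```

With this template, something like `POSTGRES_VERSION=12 docker-compose up` (Unix shell syntax) would select `postgres:12`. Plain Compose 1.x substitutes an empty string for an unset variable and prints a warning. That is why a `:-` default is handy for optional parameters.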

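Common settings in the YML template file:

- `version`: the Compose file format version. The newest major version is 3. Each file format requires a minimum Docker Engine release:

| Compose file format | Docker Engine release |
| --- | --- |
| 3.8 | 19.03.0+ |
| 3.7 | 18.06.0+ |
| 3.6 | 18.02.0+ |
| 3.5 | 17.12.0+ |
| 3.4 | 17.09.0+ |
| 3.3 | 17.06.0+ |
| 3.2 | 17.04.0+ |
| 3.1 | 1.13.1+ |
| 3.0 | 1.13.0+ |
| 2.4 | 17.12.0+ |
| 2.3 | 17.06.0+ |
| 2.2 | 1.13.0+ |
| 2.1 | 1.12.0+ |
| 2.0 | 1.10.0+ |
| 1.0 | 1.9.1+ |

- `services`: the parent node under which the services are defined.

- A docker-compose.yml file that creates a container from an image:

```yaml
version: "3"
services:
  # Service name (unique identifier of the container)
  webapp:
    # Image name
    image: examples/web
    ports:
      - "80:80"
    volumes:
      - "/data"
```

- A docker-compose.yml file that builds the container from a Dockerfile:

```yaml
version: '3'
services:
  # Service name (unique identifier of the container)
  webapp:
    build:
      # Build context: the directory that contains the Dockerfile
      context: ./dir
      # Name of the Dockerfile inside the context
      dockerfile: Dockerfile-alternate
```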
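The `ports` entries above use the short `HOST:CONTAINER` syntax. The sketch below shows how such a string breaks down. It assumes single ports with an optional host IP and an optional `/tcp` or `/udp` suffix. Compose also accepts port ranges and IPv6 host addresses, which this hypothetical `parse_port` helper does not handle.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: Optional[int]  # None: Docker picks a random host port
    container_port: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Parse a short-syntax port string such as "80:80" or "127.0.0.1:8001:8001/udp"."""
    spec, _, protocol = spec.partition("/")
    protocol = protocol or "tcp"
    parts = spec.split(":")
    if len(parts) == 1:  # "CONTAINER": only the container port is given
        return PortMapping(None, None, int(parts[0]), protocol)
    if len(parts) == 2:  # "HOST:CONTAINER"
        return PortMapping(None, int(parts[0]), int(parts[1]), protocol)
    if len(parts) == 3:  # "IP:HOST:CONTAINER"; an empty HOST means a random port
        host_port = int(parts[1]) if parts[1] else None
        return PortMapping(parts[0], host_port, int(parts[2]), protocol)
    raise ValueError(f"unsupported port spec: {spec!r}")


print(parse_port("80:80"))
print(parse_port("127.0.0.1:8001:8001/udp"))
```

The Compose documentation recommends quoting port mappings as strings. YAML 1.1 can read an unquoted `xx:yy` with small numbers as a base-60 integer.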

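Installation:

- Mac/Windows: Docker Desktop for Mac/Windows ships with the docker-compose binary, so you can use it as soon as Docker is installed.

- Linux:

```shell
$ sudo curl -L "https://github.com/docker/compose/releases/download/1.25.5/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
$ sudo chmod +x /usr/local/bin/docker-compose
```

The file is written with `-o` instead of a `>` redirect. A redirect is performed by your own unprivileged shell, not by sudo, so writing to `/usr/local/bin` would fail.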

Common commands:

- `up`: create and start all configured containers.

- `down`: stop and remove the containers and networks created by `up`. Images are removed only with `--rmi`, and volumes only with `-v`.

- `ps`: list the containers of the services.

```
D:/docker/03-compose>docker-compose ps
          Name                      Command           State   Ports
---------------------------------------------------------------------
03-compose_nbaspnetcore_1   dotnet aspnetcoreapp.dll   Exit 0
03-compose_nbginx_1         /bin/sh -c /bin/bash       Exit 0
```

- `images`: list the images used by the services.

```
D:/docker/03-compose>docker-compose images
        Container                  Repository          Tag       Image Id      Size
--------------------------------------------------------------------------------------
03-compose_nbaspnetcore_1   03-compose_nbaspnetcore   latest   73ae1ad12839   211.9 MB
03-compose_nbginx_1         03-compose_nbginx         latest   61ed0bb949f6   403.8 MB
```

- `rm`: remove stopped containers.

```
D:/docker/03-compose>docker-compose ps
          Name                      Command           State                      Ports
-----------------------------------------------------------------------------------------------------------
03-compose_nbaspnetcore_1   dotnet aspnetcoreapp.dll   Up       0.0.0.0:6067->443/tcp, 0.0.0.0:6066->80/tcp
03-compose_nbginx_1         /bin/sh -c /bin/bash       Exit 0

D:/docker/03-compose>docker-compose rm
Going to remove 03-compose_nbginx_1
Are you sure? [yN] y
Removing 03-compose_nbginx_1 ... done

D:/docker/03-compose>docker-compose ps
          Name                      Command           State                      Ports
----------------------------------------------------------------------------------------------------------
03-compose_nbaspnetcore_1   dotnet aspnetcoreapp.dll   Up       0.0.0.0:6067->443/tcp, 0.0.0.0:6066->80/tcp
```

- `build`: build or rebuild the services' images.

- `create`: create the services' containers without starting them.

- `start`: start the services' existing containers.

- `stop`: stop the services.

- `restart`: restart the services.

Example

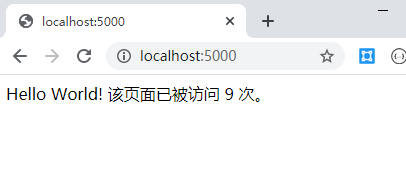

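The following example uses Python to build a small web site that records how many times a page has been visited.

- Create a `composetest` folder and write `app.py` in it:

```python
import time

import redis
from flask import Flask

app = Flask(__name__)
# "redis" is the service name from docker-compose.yml; Compose's network resolves it
cache = redis.Redis(host='redis', port=6379)


def get_hit_count():
    # Retry a few times in case Redis is still starting up
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)


@app.route('/')
def hello():
    count = get_hit_count()
    return 'Hello World! This page has been visited {} times.\n'.format(count)
```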

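- In the same folder, add a `Dockerfile`:

```dockerfile
FROM python:3.7-alpine
WORKDIR /code
ENV FLASK_APP app.py
ENV FLASK_RUN_HOST 0.0.0.0
RUN apk add --no-cache gcc musl-dev linux-headers
RUN pip install redis flask
COPY . .
CMD ["flask", "run"]
```

This Dockerfile does the following:

- Builds from the Python 3.7 base image.

- Sets the working directory to `/code`.

- Sets the environment variables used by the `flask` command.

- Installs gcc and related packages so that Python packages such as MarkupSafe and SQLAlchemy can compile their C speedups.

- Installs the Python dependencies.

- Copies the current directory into the image.

- Sets the container's default command to `flask run`.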

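- Outside the folder, create a `docker-compose.yml` file:

```yaml
version: '3'
services:
  webtest:
    build: ./composetest
    ports:
      - "5000:5000"
  redis:
    image: redis:alpine
```

In this file:

- `webtest` builds a web container and publishes port 5000;

- `redis` pulls the Redis image from Docker Hub and creates a container from it.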

- Run the Compose project, using `plana` as the project name:

```
D:/docker/03-compose>docker-compose -p plana up
Creating network "plana_default" with the default driver
Building webtest
Step 1/8 : FROM python:3.7-alpine
 ---> 16f919b9ecd5
Step 2/8 : WORKDIR /code
 ---> Using cache
 ---> 3cd944ab4513
Step 3/8 : ENV FLASK_APP app.py
 ---> Using cache
 ---> 2aa9917f4c9f
Step 4/8 : ENV FLASK_RUN_HOST 0.0.0.0
 ---> Using cache
 ---> db1028a91223
Step 5/8 : RUN apk add --no-cache gcc musl-dev linux-headers
 ---> Using cache
 ---> 13a0ed97a7dd
Step 6/8 : RUN pip install redis flask
 ---> Using cache
 ---> 1807263196e2
Step 7/8 : COPY . .
 ---> Using cache
 ---> 553b6c68eace
Step 8/8 : CMD ["flask", "run"]
 ---> Using cache
 ---> ad92e1924ad5
Successfully built ad92e1924ad5
Successfully tagged plana_webtest:latest
WARNING: Image for service webtest was built because it did not already exist. To rebuild this image you must use `docker-compose build` or `docker-compose up --build`.
Creating plana_redis_1   ... done
Creating plana_webtest_1 ... done
Attaching to plana_webtest_1, plana_redis_1
redis_1    | 1:C 29 Apr 2020 12:59:36.795 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
redis_1    | 1:C 29 Apr 2020 12:59:36.795 # Redis version=5.0.9, bits=64, commit=00000000, modified=0, pid=1, just started
redis_1    | 1:C 29 Apr 2020 12:59:36.795 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
redis_1    | 1:M 29 Apr 2020 12:59:36.800 * Running mode=standalone, port=6379.
redis_1    | 1:M 29 Apr 2020 12:59:36.800 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
redis_1    | 1:M 29 Apr 2020 12:59:36.800 # Server initialized
redis_1    | 1:M 29 Apr 2020 12:59:36.801 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.
redis_1    | 1:M 29 Apr 2020 12:59:36.802 * Ready to accept connections
webtest_1  |  * Serving Flask app "app.py"
webtest_1  |  * Environment: production
webtest_1  |    WARNING: This is a development server. Do not use it in a production deployment.
webtest_1  |    Use a production WSGI server instead.
webtest_1  |  * Debug mode: off
webtest_1  |  * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)
```

- Check the result. You must specify the project name here. Without `-p plana`, Compose uses the default project, which is named after the current directory, and finds no containers.

```
D:/docker/03-compose>docker-compose ps
Name   Command   State   Ports
------------------------------

D:/docker/03-compose>docker-compose images
Container   Repository   Tag   Image Id   Size
----------------------------------------------

D:/docker/03-compose>docker-compose -p plana images
   Container       Repository      Tag       Image Id       Size
------------------------------------------------------------------
plana_redis_1     redis           alpine   3661c84ee9d0   29.8 MB
plana_webtest_1   plana_webtest   latest   ad92e1924ad5   219.7 MB

D:/docker/03-compose>docker-compose -p plana ps
     Name                    Command               State           Ports
---------------------------------------------------------------------------------
plana_redis_1     docker-entrypoint.sh redis ...   Up      6379/tcp
plana_webtest_1   flask run                        Up      0.0.0.0:5000->5000/tcp
```
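The container names above follow the pattern `<project>_<service>_<index>`. The project name defaults to the directory name, which is why the plain `docker-compose ps` finds nothing. The sketch below approximates that naming. It assumes Compose 1.x behavior: `-p` wins over the directory name, and the name is lowercased with everything except letters, digits, `-` and `_` dropped. `project_name` and `container_name` are hypothetical helpers.

```python
import os
import re


def project_name(working_dir: str, explicit: str = "") -> str:
    """Approximate Compose 1.x: -p wins, otherwise the basename of the working directory."""
    name = explicit or os.path.basename(os.path.abspath(working_dir))
    # Keep only lowercase letters, digits, "-" and "_"
    return re.sub(r"[^-_a-z0-9]", "", name.lower()) or "default"


def container_name(project: str, service: str, index: int = 1) -> str:
    """Compose 1.x container names: <project>_<service>_<index>."""
    return f"{project}_{service}_{index}"


print(container_name(project_name("/docker/03-compose"), "nbginx"))
print(container_name(project_name("/docker/03-compose", "plana"), "webtest"))
```

The first call yields `03-compose_nbginx_1` and the second `plana_webtest_1`, matching the names in the earlier outputs.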

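Cluster orchestration: swarm mode

Swarm is the cluster management and orchestration tool built natively into the Docker Engine and implemented with SwarmKit.

Since Docker 1.12, Swarm mode has been embedded in the Docker Engine and is exposed as the `docker swarm` subcommand.

Swarm mode has a built-in key-value store and offers many new features that make Docker's native Swarm clusters competitive with Mesos and Kubernetes, for example:

- Decentralized, fault-tolerant design

- Built-in service discovery

- Load balancing

- Routing mesh

- Dynamic scaling

- Rolling updates

- Secure transport, and more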

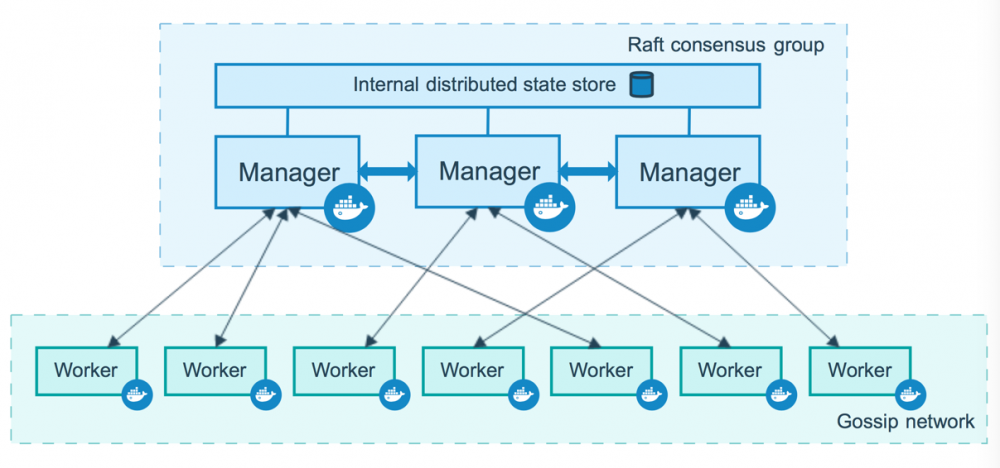
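A rolling update replaces a service's tasks in batches rather than all at once. The batch size is set with `--update-parallelism` on `docker service update` (default 1; 0 updates every task at once), or with `deploy.update_config.parallelism` in a Compose file. `--update-delay` sets the pause between batches. The sketch below illustrates only the batching idea, using a hypothetical `rolling_batches` helper. The real orchestrator also watches task health and can pause or roll back an update.

```python
from typing import List


def rolling_batches(task_slots: List[str], parallelism: int = 1) -> List[List[str]]:
    """Split task slots into update batches; parallelism 0 means update everything at once."""
    if parallelism < 0:
        raise ValueError("parallelism must be >= 0")
    if parallelism == 0:
        return [list(task_slots)] if task_slots else []
    return [task_slots[i:i + parallelism] for i in range(0, len(task_slots), parallelism)]


# Five replicas updated two at a time: the untouched tasks keep serving traffic
for batch in rolling_batches(["web.1", "web.2", "web.3", "web.4", "web.5"], parallelism=2):
    print("updating", batch)
```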

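Key concepts

- Nodes

A node is an instance of the Docker Engine that participates in the Swarm cluster. You can run several nodes on one physical host, but production deployments spread nodes across physical hosts.

A node can either join an existing swarm or initialize itself as a new swarm.

Nodes are either manager nodes or worker nodes.

- Manager nodes:

Run `docker swarm` commands to manage the nodes of the swarm.

A swarm can have several manager nodes, but only one of them can be the leader. The leader is elected through the Raft consensus protocol.

Managers dispatch tasks to worker nodes and manage each worker based on the task status it reports back.

- Worker nodes:

Receive and execute the tasks dispatched by manager nodes. By default, manager nodes also act as worker nodes. You can also configure a service to run only on manager nodes.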

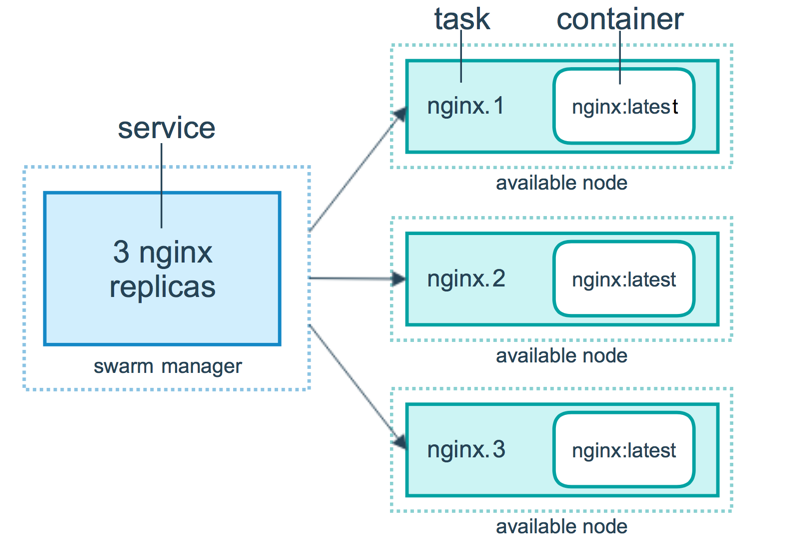
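Managers use Raft, so the swarm keeps working only while a majority (quorum) of managers is reachable. With `N` managers the quorum is `N // 2 + 1`, and the swarm tolerates losing `(N - 1) // 2` managers. The small sketch below works through that arithmetic; the helper name is hypothetical.

```python
def manager_fault_tolerance(managers: int) -> dict:
    """Raft quorum arithmetic for a swarm with the given number of manager nodes."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    quorum = managers // 2 + 1       # majority needed to elect a leader and commit changes
    tolerated = (managers - 1) // 2  # managers that may fail while quorum survives
    return {"managers": managers, "quorum": quorum, "tolerated_failures": tolerated}


for n in (1, 3, 4, 5, 7):
    print(manager_fault_tolerance(n))
```

Four managers tolerate no more failures than three. This is why Docker recommends keeping an odd number of managers.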

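Services and tasks

- Task:

The smallest scheduling unit in Swarm; currently a task is a single container. Once a task has been assigned to a node, it cannot move to another node, even if it fails. Instead, the manager schedules a new task to replace it.

- Service:

The definition of a set of tasks to run on manager or worker nodes. It is the central structure of Swarm and the primary way users interact with it.

Like a service in docker-compose, it specifies the image, the command, and so on.

Services have two modes:

- replicated services: run a specified number of tasks, distributed across the nodes according to scheduling rules.

- global services: run one task on every available node.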
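The difference between the two modes can be illustrated with a toy scheduler. In replicated mode it spreads N tasks across the nodes, always placing the next task on the node with the fewest tasks. In global mode it creates exactly one task per node. This is a simplified sketch based on Swarm's default "spread" idea. The real scheduler also weighs resources, constraints and placement preferences, and tie-breaking here is arbitrary. Both function names are hypothetical.

```python
from typing import Dict, List


def schedule_replicated(nodes: List[str], replicas: int, service: str) -> Dict[str, str]:
    """Assign task names (service.slot) to nodes, always picking the least-loaded node."""
    load = {node: 0 for node in nodes}
    placement = {}
    for slot in range(1, replicas + 1):
        node = min(nodes, key=lambda n: load[n])  # ties go to the first node in the list
        load[node] += 1
        placement[f"{service}.{slot}"] = node
    return placement


def schedule_global(nodes: List[str], service: str) -> Dict[str, str]:
    """Global mode: one task per node; a node that joins later gets one more task."""
    return {f"{service}.{node}": node for node in nodes}


print(schedule_replicated(["node1", "node3", "node5"], 4, "nginx"))
print(schedule_global(["node1", "node3", "node5"], "agent"))
```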

Walkthrough

The experiments below use play-with-docker.

- Initialize the manager node

```
$ docker swarm init
Error response from daemon: could not choose an IP address to advertise since this system has multiple addresses on different interfaces (192.168.0.48 on eth0 and 172.18.0.53 on eth1) - specify one with --advertise-addr
```

The host has several network addresses, so you must specify one with `--advertise-addr`:

```
$ docker swarm init --advertise-addr 192.168.0.48
Swarm initialized: current node (uas5ayulwp28ldrcr3bscmitr) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-17khjeqkfmjswynhda1i0dfqw4hy5io2006xktyvav272ccfie-6xomx3u1mvm7pacj0ymheyu6e 192.168.0.48:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

List the nodes:

```
$ docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
uas5ayulwp28ldrcr3bscmitr *   node1      Ready    Active         Leader           19.03.4
```

- Add worker nodes

```
$ docker swarm join --token SWMTKN-1-17khjeqkfmjswynhda1i0dfqw4hy5io2006xktyvav272ccfie-6xomx3u1mvm7pacj0ymheyu6e 192.168.0.48:2377
This node joined a swarm as a worker.
```

After you run this command on two other hosts, list the nodes on the manager:

```
$ docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
uas5ayulwp28ldrcr3bscmitr *   node1      Ready    Active         Leader           19.03.4
2rum5pm81t76tjv91rld1n8vu     node3      Ready    Active                          19.03.4
u4tbyt95nhy7l6vj3z013r8wz     node5      Ready    Active                          19.03.4
```
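The join token is not an opaque password. Docker's swarm security documentation describes it as a digest of the swarm's root CA certificate plus a randomly generated secret. The joining node uses the digest to verify the manager, and the secret decides whether the node joins as a worker or a manager. The sketch below splits a token, assuming the `SWMTKN-1-<CA digest>-<secret>` layout seen in the output above. `parse_join_token` is a hypothetical helper, not part of any Docker API.

```python
from typing import NamedTuple


class JoinToken(NamedTuple):
    version: str
    ca_digest: str  # identifies the swarm's root CA so the new node can verify the manager
    secret: str     # random part; worker and manager tokens differ here


def parse_join_token(token: str) -> JoinToken:
    """Split a join token of the assumed form SWMTKN-1-<ca digest>-<secret>."""
    parts = token.split("-")
    if len(parts) != 4 or parts[0] != "SWMTKN" or parts[1] != "1":
        raise ValueError("not a SWMTKN-1 join token")
    return JoinToken(parts[1], parts[2], parts[3])


token = "SWMTKN-1-17khjeqkfmjswynhda1i0dfqw4hy5io2006xktyvav272ccfie-6xomx3u1mvm7pacj0ymheyu6e"
parsed = parse_join_token(token)
print(parsed.version, len(parsed.ca_digest), len(parsed.secret))
```

Because only the secret part grants access, `docker swarm join-token --rotate worker` can invalidate a leaked token without rebuilding the swarm.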

- Deploy services

(Run all of the following commands on the manager node.)

- Create a service

Create an nginx service in the cluster:

```
$ docker service create --replicas 3 -p 80:80 --name nginx nginx:latest
stieixvmvfipi4w6qh49t9es0
overall progress: 3 out of 3 tasks
1/3: running
2/3: running
3/3: running
verify: Service converged
```

- Inspect the service

List the services:

```
$ docker service ls
ID             NAME    MODE         REPLICAS   IMAGE          PORTS
stieixvmvfip   nginx   replicated   3/3        nginx:latest   *:80->80/tcp
```

Show the service's tasks:

```
$ docker service ps nginx
ID             NAME      IMAGE          NODE    DESIRED STATE   CURRENT STATE           ERROR   PORTS
xgc5u5m5gl9p   nginx.1   nginx:latest   node3   Running         Running 2 minutes ago
y76pttbz9pkh   nginx.2   nginx:latest   node5   Running         Running 2 minutes ago
oti754af3izt   nginx.3   nginx:latest   node1   Running         Running 2 minutes ago
```

In a browser, the IP address of either the manager node or a worker node shows the nginx page.

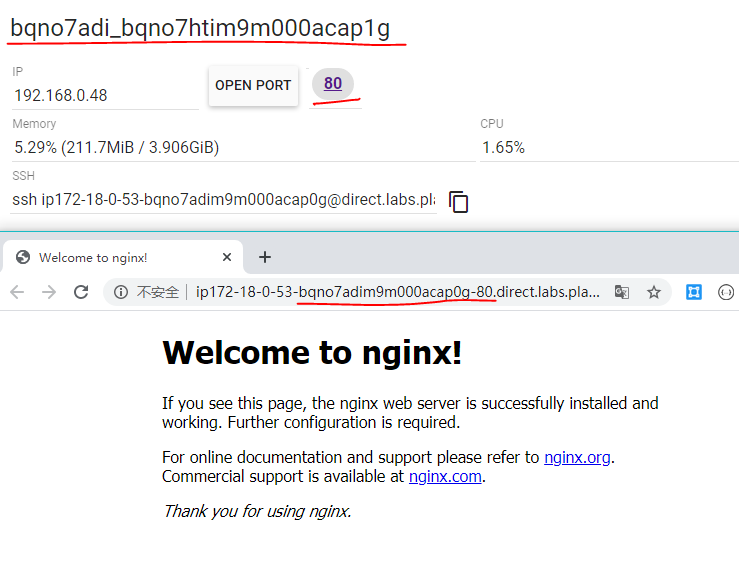

Manager node:

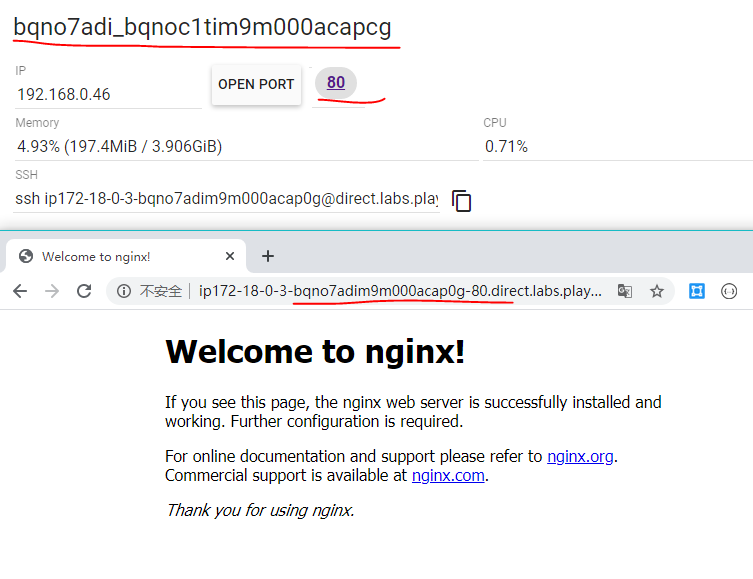

Worker node 1:

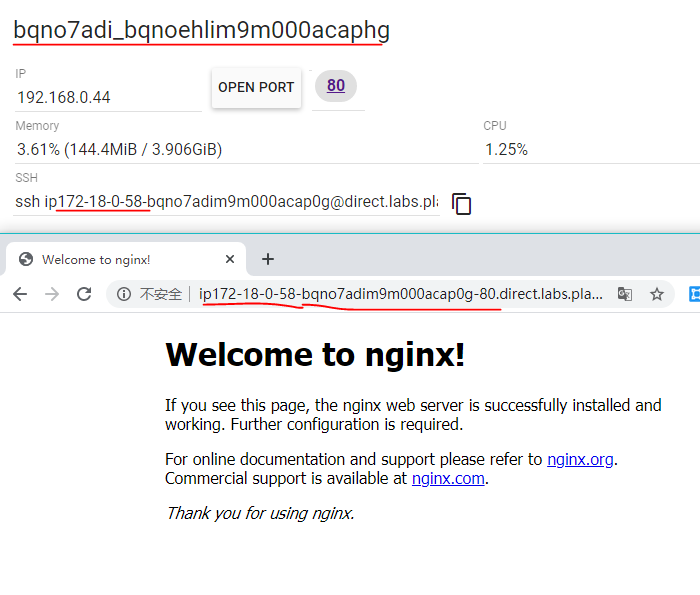

Worker node 2:
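Every node answers on port 80 because of the routing mesh. A published port is opened on every node in the swarm, and a request arriving at any node is forwarded to one of the service's tasks, possibly on another node. Swarm implements this with IPVS in the kernel. The sketch below is only a conceptual model in plain Python, assuming per-node round-robin. The class name, node names and task addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class RoutingMesh:
    """Toy model of the ingress routing mesh (conceptual, not Swarm's implementation)."""

    def __init__(self, nodes: List[str], task_addresses: List[str]):
        # Every node listens on the published port and knows every task of the service
        self._rotations: Dict[str, Iterator[str]] = {
            node: cycle(task_addresses) for node in nodes
        }

    def route(self, entry_node: str) -> str:
        """Pick the task that serves a request arriving at entry_node (round-robin per node)."""
        if entry_node not in self._rotations:
            raise KeyError(f"{entry_node} is not part of the swarm")
        return next(self._rotations[entry_node])


# Hypothetical overlay addresses of the three nginx tasks
mesh = RoutingMesh(["node1", "node3", "node5"], ["10.0.0.5", "10.0.0.6", "10.0.0.7"])
for node in ["node1", "node1", "node3", "node5"]:
    print(node, "->", mesh.route(node))
```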

- Scale the service

- When traffic is low, reduce the number of containers running the service:

```
$ docker service scale nginx=2
nginx scaled to 2
overall progress: 2 out of 2 tasks
1/2: running
2/2: running
verify: Service converged
```

Check the service list:

```
$ docker service ls
ID             NAME    MODE         REPLICAS   IMAGE          PORTS
stieixvmvfip   nginx   replicated   2/2        nginx:latest   *:80->80/tcp
```

- When traffic is high, increase the number of containers running the service:

```
$ docker service scale nginx=3
nginx scaled to 3
overall progress: 3 out of 3 tasks
1/3: running
2/3: running
3/3: running
verify: Service converged
```
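`docker service scale` only changes the desired replica count. The managers then reconcile the actual state toward it, starting new tasks or shutting down surplus ones. The same loop replaces failed tasks, which keeps the service at 3/3. The sketch below models one reconciliation step. It assumes tasks are identified by slot number and that surplus tasks are removed from the highest slots, which is a simplification. `reconcile` is a hypothetical helper.

```python
from typing import List, Tuple


def reconcile(running_slots: List[int], desired: int) -> Tuple[List[int], List[int]]:
    """Return (slots_to_start, slots_to_stop) to move the running tasks to `desired` replicas."""
    if desired < 0:
        raise ValueError("desired replicas must be >= 0")
    running = sorted(running_slots)
    if len(running) > desired:
        # Scale down: stop the surplus tasks (highest slots first in this sketch)
        return [], running[desired:]
    # Scale up (or refill after failures): use the lowest free slot numbers
    missing = desired - len(running)
    free = [s for s in range(1, desired + len(running) + 1) if s not in running]
    return free[:missing], []


print(reconcile([1, 2, 3], 2))  # nginx=2
print(reconcile([1, 2], 3))     # nginx=3
```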

- Remove the service

```
$ docker service rm nginx
nginx
```

Check the services:

```
$ docker service ls
ID   NAME   MODE   REPLICAS   IMAGE   PORTS
```

- Deploy services from a compose file

`docker service create` deploys only one service at a time. A docker-compose.yml file lets you start several related services at once.

- Create the docker-compose.yml file

```yaml
version: "3"
services:
  nginx:
    image: nginx:latest
    ports:
      - "80:80"
    deploy:
      mode: replicated
      replicas: 3
```

- Deploy the services

Deploy the yml file with `docker stack deploy`:

```
$ docker stack deploy -c docker-compose.yml nginx
Creating network nginx_default
Creating service nginx_nginx
```

- Inspect the services

```
$ docker stack ls
NAME    SERVICES   ORCHESTRATOR
nginx   1          Swarm

$ docker service ls
ID             NAME          MODE         REPLICAS   IMAGE          PORTS
92n4xd254vu1   nginx_nginx   replicated   3/3        nginx:latest   *:80->80/tcp

$ docker service ps nginx_nginx
ID             NAME            IMAGE          NODE    DESIRED STATE   CURRENT STATE           ERROR   PORTS
p6d3xibx9swx   nginx_nginx.1   nginx:latest   node1   Running         Running 2 minutes ago
v0taw0rnr9v3   nginx_nginx.2   nginx:latest   node3   Running         Running 2 minutes ago
2z3sbkdo16oi   nginx_nginx.3   nginx:latest   node5   Running         Running 2 minutes ago
```

- Remove the services

```
$ docker stack down nginx
Removing service nginx_nginx
Removing network nginx_default
```
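`docker stack deploy` prefixes every resource with the stack name. That is why the `nginx` service in the `nginx` stack appears as `nginx_nginx`, and the default network as `nginx_default`. The sketch below derives the service names and replica counts from a Compose file that has already been parsed into a dictionary, for example with a YAML library. It assumes a missing `deploy.replicas` means 1 and that global services have no fixed count. `stack_services` is a hypothetical helper.

```python
from typing import Dict, Optional


def stack_services(stack: str, compose: dict) -> Dict[str, Optional[int]]:
    """Map '<stack>_<service>' to its replica count (None for global services)."""
    result: Dict[str, Optional[int]] = {}
    for name, service in compose.get("services", {}).items():
        deploy = service.get("deploy", {})
        if deploy.get("mode", "replicated") == "global":
            result[f"{stack}_{name}"] = None  # one task per node, no fixed count
        else:
            result[f"{stack}_{name}"] = deploy.get("replicas", 1)
    return result


# The docker-compose.yml above, already parsed into a dictionary
compose = {
    "version": "3",
    "services": {
        "nginx": {
            "image": "nginx:latest",
            "ports": ["80:80"],
            "deploy": {"mode": "replicated", "replicas": 3},
        }
    },
}
print(stack_services("nginx", compose))  # {'nginx_nginx': 3}
```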
