Canal-Admin Cluster Configuration and Pitfalls

Cluster configuration

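Installing canal-admin is not covered again here; see the earlier post. This post records how to configure a canal-admin cluster environment and the pitfalls encountered along the way.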

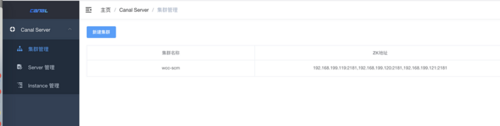

Create a cluster

Fill in the ZooKeeper cluster information.
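The ZooKeeper field takes a comma-separated list of `host:port` entries, the same format used by `canal.zkServers` in the configuration in this post. As a minimal sketch (the helper names `parse_zk_servers` and `is_reachable` are hypothetical, not part of Canal), the list can be parsed and each endpoint probed with a plain TCP connect before it is entered in the form. A successful connect only shows that the port is open, not that the ZooKeeper ensemble is healthy.

```python
import socket


def parse_zk_servers(value):
    """Split a 'host:port,host:port' string into a list of (host, port) tuples."""
    endpoints = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate a trailing comma
        host, sep, port = item.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {item!r}")
        endpoints.append((host, int(port)))
    return endpoints


def is_reachable(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Calling `is_reachable(host, port)` for each tuple returned by `parse_zk_servers(...)` gives a quick view of which nodes answer from the machine that will run canal-server.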

Cluster configuration parameters

`#################################################
# common argument
# tcp bind ip
canal.ip =
# register ip to zookeeper
canal.register.ip =
canal.port = 11111
canal.metrics.pull.port = 11112
# canal instance user/passwd
canal.user = canal
canal.passwd = E3619321C1A937C46A0D8BD1DAC39F93B27D4458

# canal admin config
canal.admin.manager = 127.0.0.1:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441

canal.zkServers = 192.168.199.119:2181,192.168.199.120:2181,192.168.199.121:2181
# flush data to zk
canal.zookeeper.flush.period = 1000
canal.withoutNetty = false
# tcp, kafka, RocketMQ
canal.serverMode = kafka
# flush meta cursor/parse position to file
canal.file.data.dir = ${canal.conf.dir}
# how often (ms) canal flushes persisted data to file
canal.file.flush.period = 1000
# memory store RingBuffer size, should be Math.pow(2,n)
canal.instance.memory.buffer.size = 16384
# memory store RingBuffer used memory unit size, default 1kb
canal.instance.memory.buffer.memunit = 1024
# memory store gets mode used MEMSIZE or ITEMSIZE
canal.instance.memory.batch.mode = MEMSIZE
canal.instance.memory.rawEntry = true

# whether to enable the heartbeat check
canal.instance.detecting.enable = false
#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()
# heartbeat check SQL
canal.instance.detecting.sql = select 1
# heartbeat check interval (seconds)
canal.instance.detecting.interval.time = 3
# number of retries after a failed heartbeat check
canal.instance.detecting.retry.threshold = 3
# whether to switch MySQL automatically after the heartbeat check fails
canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery
canal.instance.transaction.size = 1024
# mysql fallback connected to new master should fallback times
canal.instance.fallbackIntervalInSeconds = 60

# network config
canal.instance.network.receiveBufferSize = 16384
canal.instance.network.sendBufferSize = 16384
canal.instance.network.soTimeout = 30

# binlog filter config
canal.instance.filter.druid.ddl = true
# ignore DCL statements
canal.instance.filter.query.dcl = true
canal.instance.filter.query.dml = false
# ignore DDL statements
canal.instance.filter.query.ddl = true
# whether to ignore errors when fetching the table structure for a binlog event fails
canal.instance.filter.table.error = false
canal.instance.filter.rows = false
canal.instance.filter.transaction.entry = false

# binlog format/image check
canal.instance.binlog.format = ROW,STATEMENT,MIXED
canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation
canal.instance.get.ddl.isolation = false

# parallel parser config
# enable parallel binlog parsing
canal.instance.parser.parallel = true
# concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()
canal.instance.parser.parallelThreadSize = 16
# disruptor ringbuffer size, must be power of 2
canal.instance.parser.parallelBufferSize = 256

# table meta tsdb info
canal.instance.tsdb.enable = true
canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}
canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;
canal.instance.tsdb.dbUsername = canal
canal.instance.tsdb.dbPassword = canal
# dump snapshot interval, default 24 hour
canal.instance.tsdb.snapshot.interval = 24
# purge snapshot expire, default 360 hour (15 days)
canal.instance.tsdb.snapshot.expire = 360

# aliyun ak/sk, support rds/mq
canal.aliyun.accessKey =
canal.aliyun.secretKey =

# destinations
canal.destinations =
# conf root dir
canal.conf.dir = ../conf
# auto scan instance dir add/remove and start/stop instance
canal.auto.scan = true
canal.auto.scan.interval = 5

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml
#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = manager
canal.instance.global.lazy = false
canal.instance.global.manager.address = ${canal.admin.manager}
#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml
#canal.instance.global.spring.xml = classpath:spring/file-instance.xml
# persist mainly to ZooKeeper so that state is shared across the cluster
canal.instance.global.spring.xml = classpath:spring/default-instance.xml

# MQ
canal.mq.servers = 192.168.199.122:9092,192.168.199.123:9092,192.168.199.124:9092
# number of retries
canal.mq.retries = 3
# batch size
canal.mq.batchSize = 16384
canal.mq.maxRequestSize = 1048576
# linger time (ms)
canal.mq.lingerMs = 100
canal.mq.bufferMemory = 33554432
# batch size when fetching data from canal
canal.mq.canalBatchSize = 50
# timeout when fetching data from canal
canal.mq.canalGetTimeout = 100
# true means JSON format
canal.mq.flatMessage = true
# no compression
canal.mq.compressionType = none
# ack only after the leader and all replicas have succeeded
canal.mq.acks = all
canal.mq.properties. =
canal.mq.producerGroup = test
# Set this value to "cloud", if you want open message trace feature in aliyun.
canal.mq.accessChannel = local
# aliyun mq namespace
canal.mq.namespace =

# Kafka Kerberos Info
canal.mq.kafka.kerberos.enable = false
canal.mq.kafka.kerberos.krb5FilePath = "../conf/kerberos/krb5.conf"
canal.mq.kafka.kerberos.jaasFilePath = "../conf/kerberos/jaas.conf"
`
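The `canal.passwd` and `canal.admin.passwd` values above are 40-character hex digests, not plaintext. Assuming, as Canal's documentation suggests, that they use the MySQL `PASSWORD()` scheme (SHA-1 applied twice, with the leading `*` dropped), a digest for a new password can be produced without a MySQL server; this is handy because `PASSWORD()` was removed in MySQL 8.0. The function name `mysql_password_hash` is hypothetical, and the scheme itself is an assumption to verify against your Canal version.

```python
import hashlib


def mysql_password_hash(plaintext):
    """Return SHA1(SHA1(plaintext)) as 40 uppercase hex characters.

    This mirrors MySQL's legacy PASSWORD() output without the leading '*'.
    Whether Canal expects exactly this format is an assumption here.
    """
    first = hashlib.sha1(plaintext.encode("utf-8")).digest()
    return hashlib.sha1(first).hexdigest().upper()
```

If the assumption holds, `mysql_password_hash("admin")` should reproduce the `canal.admin.passwd` value shown in the configuration; a mismatch would indicate that a different scheme is in use.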

Create a server

Create an instance

Pitfalls
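Two constraints stated in the configuration comments are easy to break while tuning: `canal.instance.memory.buffer.size` and `canal.instance.parser.parallelBufferSize` must both be powers of two. It is also easy to leave two alternatives for the same key uncommented; under `java.util.Properties` semantics the later assignment silently overwrites the earlier one. A minimal pre-flight sketch that flags both cases before the text is pasted into canal-admin follows. The helper names are hypothetical, and the parser is deliberately simplified: it handles only `key = value` lines and ignores line continuations and `:` separators.

```python
def parse_properties(text):
    """Parse simple 'key = value' lines; return (values, duplicate_keys)."""
    values, duplicates = {}, []
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments ('#' or '!'), and lines without '='.
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if key in values and key not in duplicates:
            duplicates.append(key)
        values[key] = value  # a later assignment overwrites an earlier one
    return values, duplicates


def is_power_of_two(n):
    """Return True for 1, 2, 4, 8, ... and False otherwise."""
    return n > 0 and (n & (n - 1)) == 0


POWER_OF_TWO_KEYS = (
    "canal.instance.memory.buffer.size",
    "canal.instance.parser.parallelBufferSize",
)


def check_config(text):
    """Return a list of human-readable warnings for the given properties text."""
    values, duplicates = parse_properties(text)
    warnings = [f"{key} is assigned more than once" for key in duplicates]
    for key in POWER_OF_TWO_KEYS:
        raw = values.get(key)
        if raw is None:
            continue
        if not raw.isdigit() or not is_power_of_two(int(raw)):
            warnings.append(f"{key} = {raw} is not a power of two")
    return warnings
```

An empty list from `check_config(text)` means neither problem was found; it says nothing about whether the remaining values suit a given deployment.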
