Spring Boot + Logstash: Building a Distributed Log Collection System on ELFK

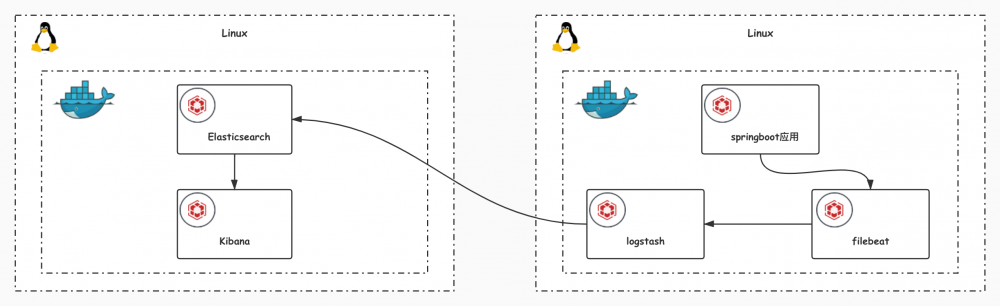

1. Architecture of the distributed logging call chain

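Log collection uses the ELFK stack (Elasticsearch, Logstash, Filebeat, Kibana) to gather logs from a clustered deployment. The application writes its logs to files. Filebeat, a lightweight log shipper, reads those files and forwards them to Logstash. Logstash formats the entries and persists them to Elasticsearch. Kibana then visualizes and analyzes the log data, so that logs can be searched quickly when tracking down production issues.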

Figure: distributed logging call-chain architecture

2. Integrating Logstash with Spring Boot

2.1 Add the Maven dependency

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>5.3</version>
</dependency>

2.2 Create logback-spring.xml under src/main/resources

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE configuration>
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <!-- Directory where log files are stored -->
    <property name="LOG_HOME" value="/Users/zhouxinlei/logs" />
    <springProperty name="springAppName" scope="context" source="spring.application.name" />
    <!-- Full path of the active log file -->
    <property name="LOG_FILE" value="${LOG_HOME}/${springAppName}.log"/>
    <contextName>${springAppName}</contextName>

    <!-- File appender: rolls over daily, and within a day once the file reaches maxFileSize -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_FILE}</file>
        <append>true</append>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.%i.gz</fileNamePattern>
            <maxHistory>7</maxHistory>
            <maxFileSize>10MB</maxFileSize>
        </rollingPolicy>
        <!-- Write each event as one JSON object -->
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <pattern>
                    <pattern>
                        {
                        "severity": "%level",
                        "service": "${springAppName:-}",
                        "trace": "%X{X-B3-TraceId:-}",
                        "span": "%X{X-B3-SpanId:-}",
                        "exportable": "%X{X-Span-Export:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger{40}",
                        "rest": "%message"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>

    <!-- MyBatis lives in org.apache.ibatis (the original listed com.apache.ibatis, which matches no logger) -->
    <logger name="org.apache.ibatis" level="TRACE"/>
    <logger name="java.sql.Connection" level="DEBUG"/>
    <logger name="java.sql.Statement" level="DEBUG"/>
    <logger name="java.sql.PreparedStatement" level="DEBUG"/>
    <logger name="org.springframework.kafka" level="ERROR"/>
    <logger name="org.apache.kafka" level="ERROR"/>

    <root level="INFO">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
    </root>
</configuration>

The encoder is set to net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder, which writes each log event to the file as a JSON object. ELFK then collects these already-formatted JSON logs.
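To make the shape of one output line concrete, here is a stdlib-only Java sketch that assembles a JSON object with the same field names as the pattern in the configuration. It is an illustration of the output, not the encoder's implementation. The sample values (`demo-service`, `com.example.DemoController`, the pid, the message) are hypothetical, the `@timestamp` key assumes the timestamp provider's default field name, and the exact timestamp rendering of the real encoder may differ.

```java
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.Map;

// Illustration only: mimics the shape of one line in the log file.
// This is NOT how logstash-logback-encoder works internally.
class JsonLogLineSketch {

    // Escape the characters that may not appear raw inside a JSON string.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n");  break;
                case '\r': sb.append("\\r");  break;
                case '\t': sb.append("\\t");  break;
                default:
                    if (c < 0x20) {
                        // Remaining control characters as 4-digit hex escapes.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    // Render the fields as a single-line JSON object, keeping insertion order.
    static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
            first = false;
        }
        return sb.append('}').toString();
    }

    // A sample event using the field names from the <pattern> provider.
    static Map<String, String> sampleEvent(Instant when) {
        Map<String, String> f = new LinkedHashMap<>();
        f.put("@timestamp", when.toString());       // assumed default field name
        f.put("severity", "INFO");
        f.put("service", "demo-service");            // hypothetical spring.application.name
        f.put("trace", "");                          // empty when the MDC key is absent
        f.put("span", "");
        f.put("exportable", "");
        f.put("pid", "12345");                       // hypothetical
        f.put("thread", "main");
        f.put("class", "com.example.DemoController"); // hypothetical logger name
        f.put("rest", "user \"alice\" logged in");   // hypothetical message
        return f;
    }

    public static void main(String[] args) {
        System.out.println(toJsonLine(sampleEvent(Instant.parse("2020-01-01T00:00:00Z"))));
    }
}
```

The `trace`, `span` and `exportable` fields stay empty unless something populates the `X-B3-TraceId`, `X-B3-SpanId` and `X-Span-Export` MDC keys, because the pattern falls back to an empty default (`:-`) for each of them.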

At this point, the Spring Boot application's logs are written to file in a structured form, ready to be collected.
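When pointing a collector at the log directory, it helps to know which files are live and which are compressed archives. The sketch below mirrors the `fileNamePattern` from the rolling policy (`${LOG_FILE}.%d{yyyy-MM-dd}.%i.gz`) to show what an archived file name looks like. The class and method names, and the `demo-service` application name, are hypothetical.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Illustration only: reproduces the naming scheme of the rolling policy's
// fileNamePattern so the active file can be told apart from archives.
class RolledLogNames {

    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy-MM-dd");

    // Name of an archive for the given day and per-day index (%i).
    static String archiveName(String logFile, LocalDate day, int index) {
        return logFile + "." + day.format(DAY) + "." + index + ".gz";
    }

    // Archives are gzip-compressed; the active file keeps the plain .log name.
    static boolean isArchive(String fileName) {
        return fileName.endsWith(".gz");
    }

    public static void main(String[] args) {
        String active = "/Users/zhouxinlei/logs/demo-service.log"; // hypothetical app name
        String rolled = archiveName(active, LocalDate.of(2020, 1, 1), 0);
        System.out.println(rolled);            // /Users/zhouxinlei/logs/demo-service.log.2020-01-01.0.gz
        System.out.println(isArchive(active)); // false
        System.out.println(isArchive(rolled)); // true
    }
}
```

Only the active `.log` file contains plain JSON lines; the `.gz` archives are compressed and would normally be left out of whatever path the shipper watches.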

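3. Collecting the logs with ELFK

See the companion article "Installing ELFK with Docker for log statistics".

With that, the log collection system is complete. A follow-up article will cover shipping application logs into the ELK stack through Kafka.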
